Introduction

In this article, you will see an example of a project implementation based on the Transactional outbox, Inbox, and Saga event-driven architecture patterns. The stack of technologies includes Kotlin, Spring Boot 3, JDK 21, virtual threads, GraalVM, PostgreSQL, Kafka, Kafka Connect, Debezium, CloudEvents, Caddy, Docker, and other tools.

In event-driven architecture (EDA), the interaction between services is carried out using events, or messages. A message can contain, for example, an update of some entity state or a command to perform a given action.

Transactional outbox is a microservices architecture design pattern that solves dual writes problem; the problem is that if some business event occurs in an application, we can’t guarantee the atomicity of the corresponding database transaction and sending of an event (to the message broker, queue, another service, etc.), for example:

-

if a service sends the event in the middle of transaction, there is no guarantee that the transaction will commit.

-

if a service sends the event after committing the transaction, there is no guarantee that it won’t crash before sending the message.

To solve the problem, an application doesn’t send an event; instead, the application stores the event in the message outbox. In case of use a relational database where we have

ACID guarantees, the outbox represents an additional table in the application’s database; for other types of databases there can be different solutions, for example, the message

can be stored as an additional property along with changed business entity. In this project, PostgreSQL is used as a database, so an event is inserted into the outbox table as a

part of a transaction that also includes a change of some business data. Atomicity is guaranteed because it is a local ACID transaction. The table acts as a temporary message queue.

Inbox pattern is used to process incoming messages from a queue and operates in the reverse order

when compared with Transactional outbox: a message is first stored in a database (in an additional inbox table), then it is processed by an application at

a convenient pace. Using the pattern, a message won’t be lost in case of an error at processing and can be reprocessed.

When a business transaction spans multiple services, implement it as a Saga, a sequence of local transactions. Each local transaction updates the database and publishes a message to trigger the next local transaction in the saga. If a local transaction fails because it violates a business rule, then the saga executes a series of compensating transactions that undo the changes that were made by the preceding local transactions.

Kafka Connect is a framework for connecting Kafka with external systems such as databases, key-value stores, search indexes, and file systems, using so-called connectors.

Debezium is an open source distributed platform for change data capture (CDC). It converts changes in your databases (inserts, updates, or deletes) into event streams so that your applications can detect and immediately respond to those row-level changes.

CloudEvents is a specification for describing event data in a common way.

The accompanying project is available on GitHub. It represents a microservices platform for a library; its architecture looks as follows:

The project contains the following components that compose the aforementioned platform:

-

three Spring Boot applications and their databases (PostgreSQL):

These services are native (GraalVM) applications running as Docker containers.

-

one Kafka Connect instance with nine Debezium connectors deployed:

-

three connectors to

bookdatabase:-

The connector reads messages intended for

book-servicefrom a Kafka topic and inserts them intobook.public.inboxtable. -

The connector is an end part of user data streaming (replication) from

user.public.library_usertable; it inserts records intobook.public.user_replicatable. It is a counterpart ofuser.source.streamingconnector. -

The connector captures new messages from

book.public.outboxtable by reading WAL file and publishes them to a Kafka topic.

-

-

five connectors to

userdatabase:-

The connector reads messages intended for

user-servicefrom a Kafka topic and inserts them intouser.public.inboxtable. -

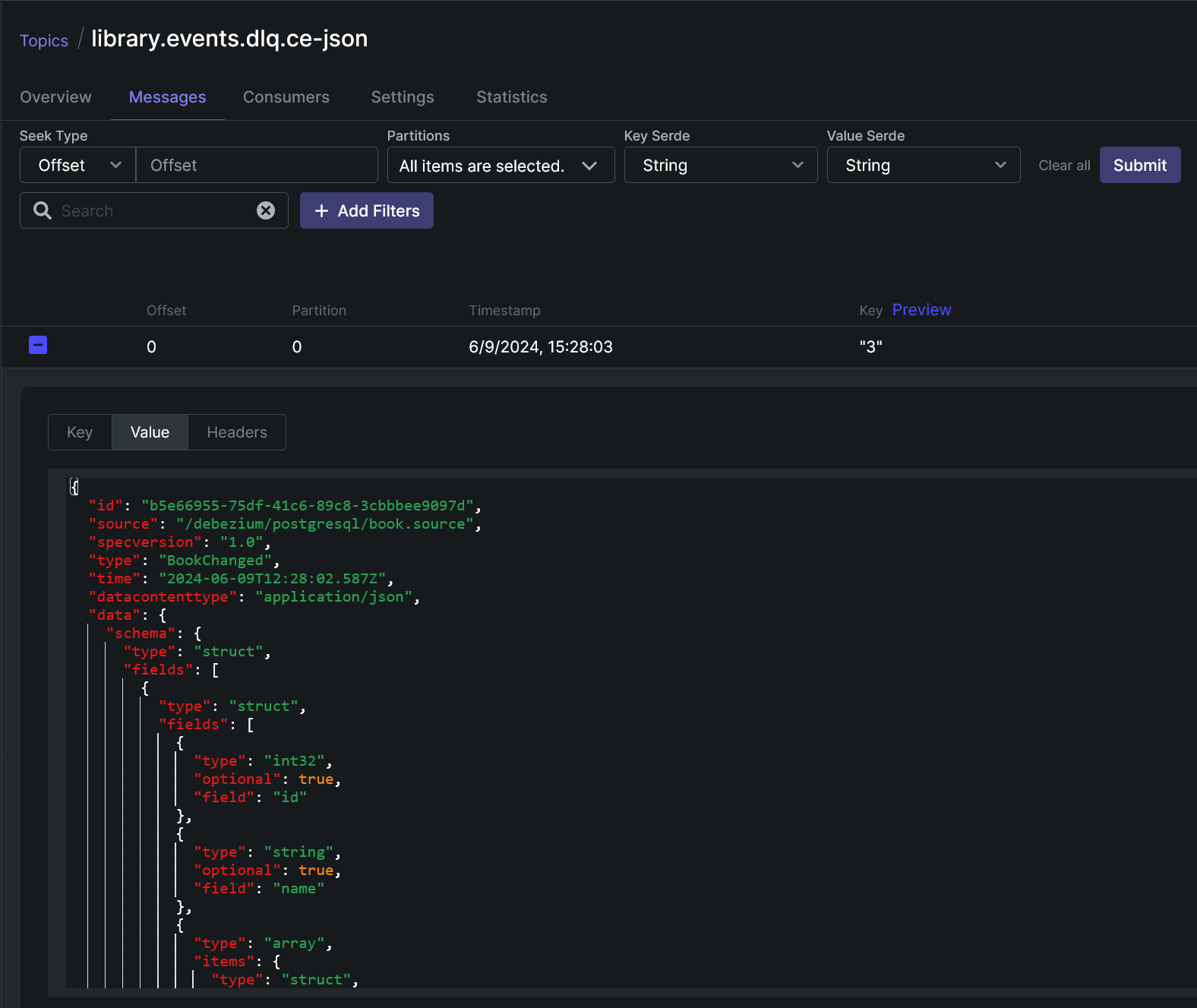

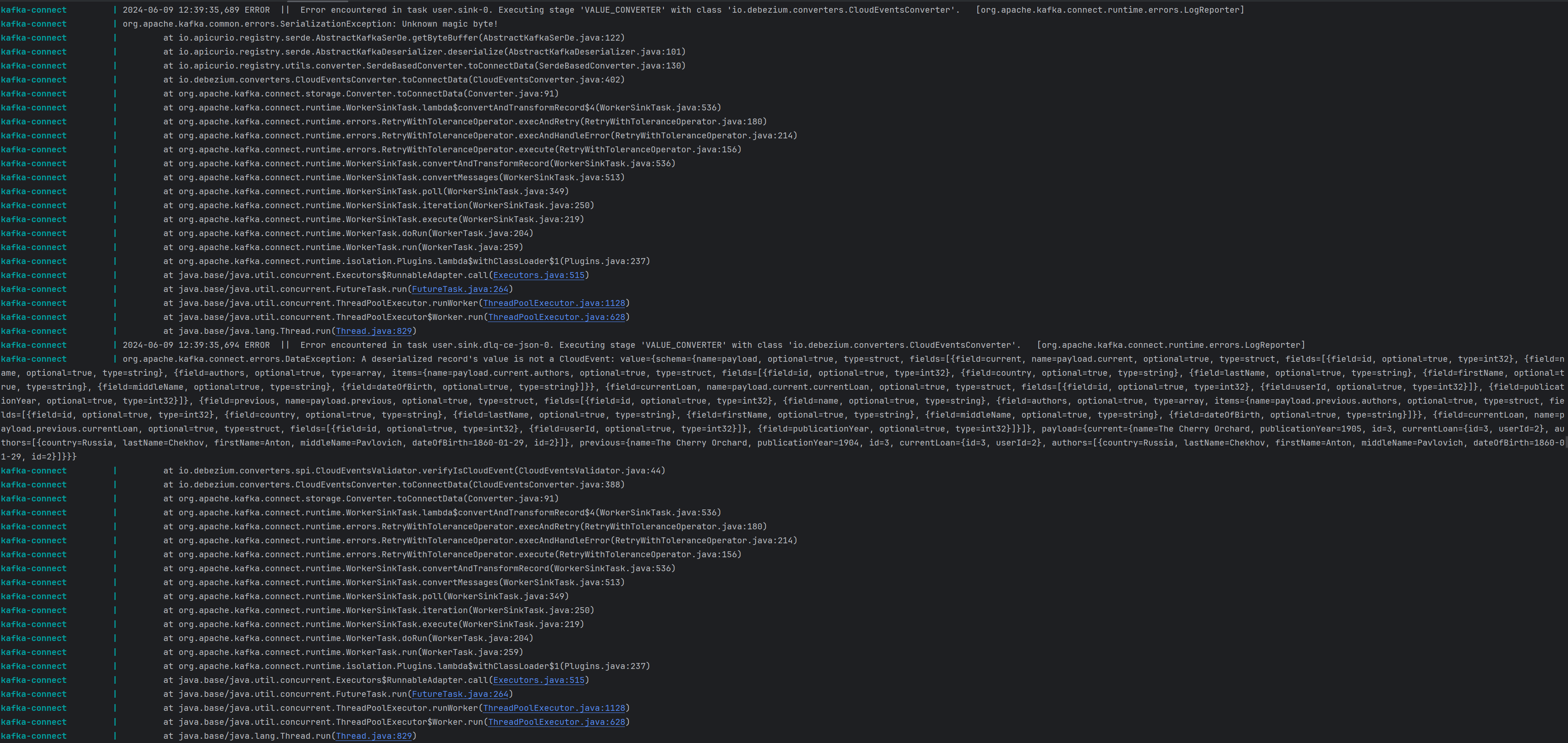

The connector reads messages not processed by

user.sinkconnector from a dead letter queue (Kafka topic) and inserts them intouser.public.inboxtable. -

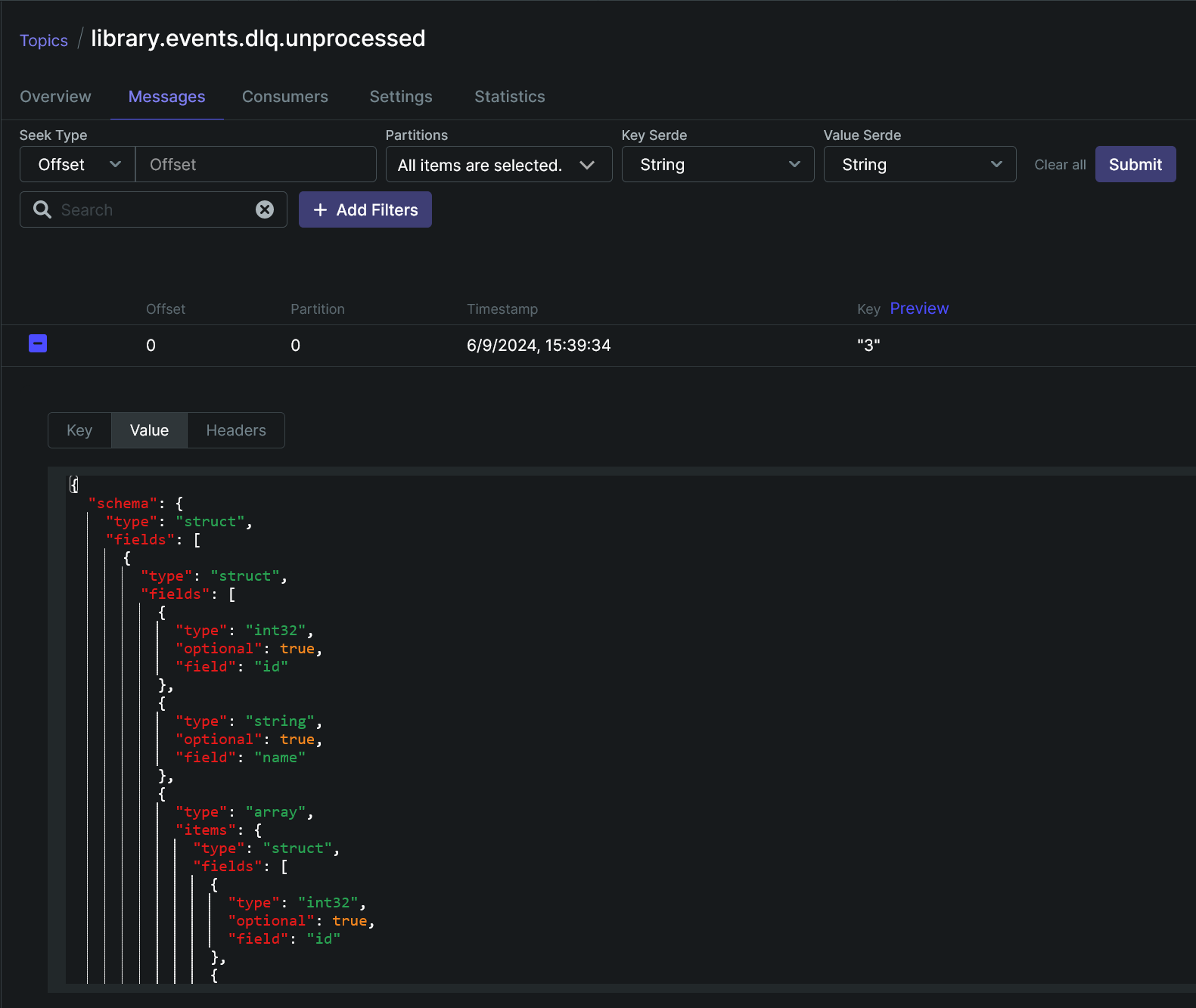

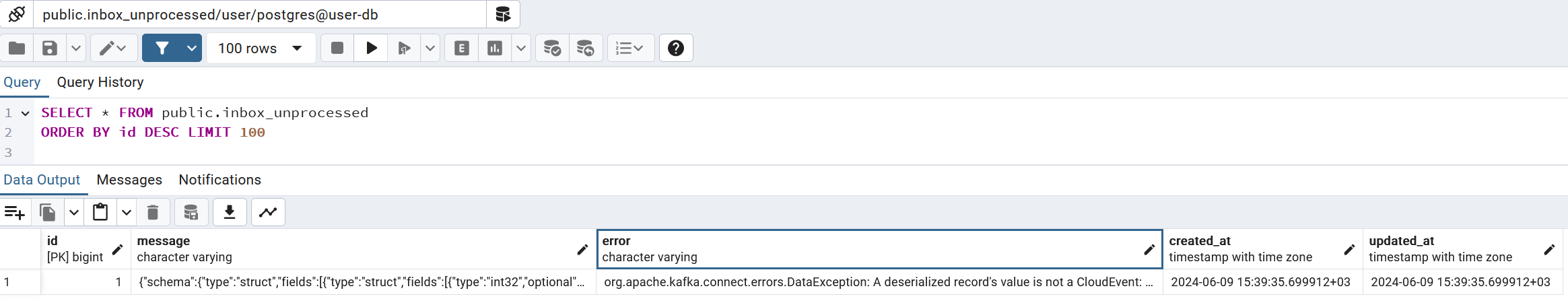

The connector reads messages not processed by

user.sink.dlq-ce-jsonconnector from a dead letter queue (Kafka topic) and inserts them intouser.public.inbox_unprocessedtable. -

The connector captures new messages from

user.public.outboxtable by reading WAL file and publishes them to a Kafka topic. -

The connector is a start part of user data streaming (replication) from

user.public.library_usertable. It is a counterpart ofbook.sink.streaming.

-

-

one connector to

notificationdatabase-

The connector reads messages intended for

notification-servicefrom a Kafka topic and inserts them intonotification.public.inboxtable.

-

-

-

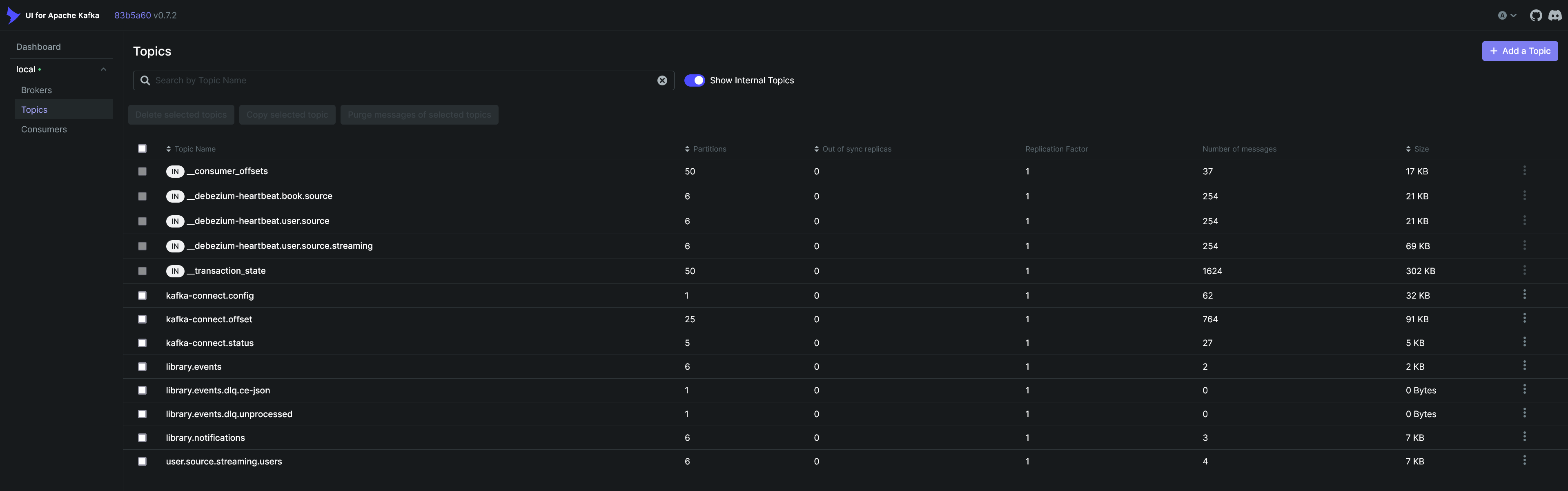

Kafka

It runs in KRaft mode. KRaft is the consensus protocol that was introduced to remove Kafka’s dependency on ZooKeeper for metadata management.

The project runs only one instance of Kafka, as well as Kafka Connect. This is done to simplify configuration, focus on the architecture itself, and reduce resource consumption. To set up clusters for both tools, do your own research.

-

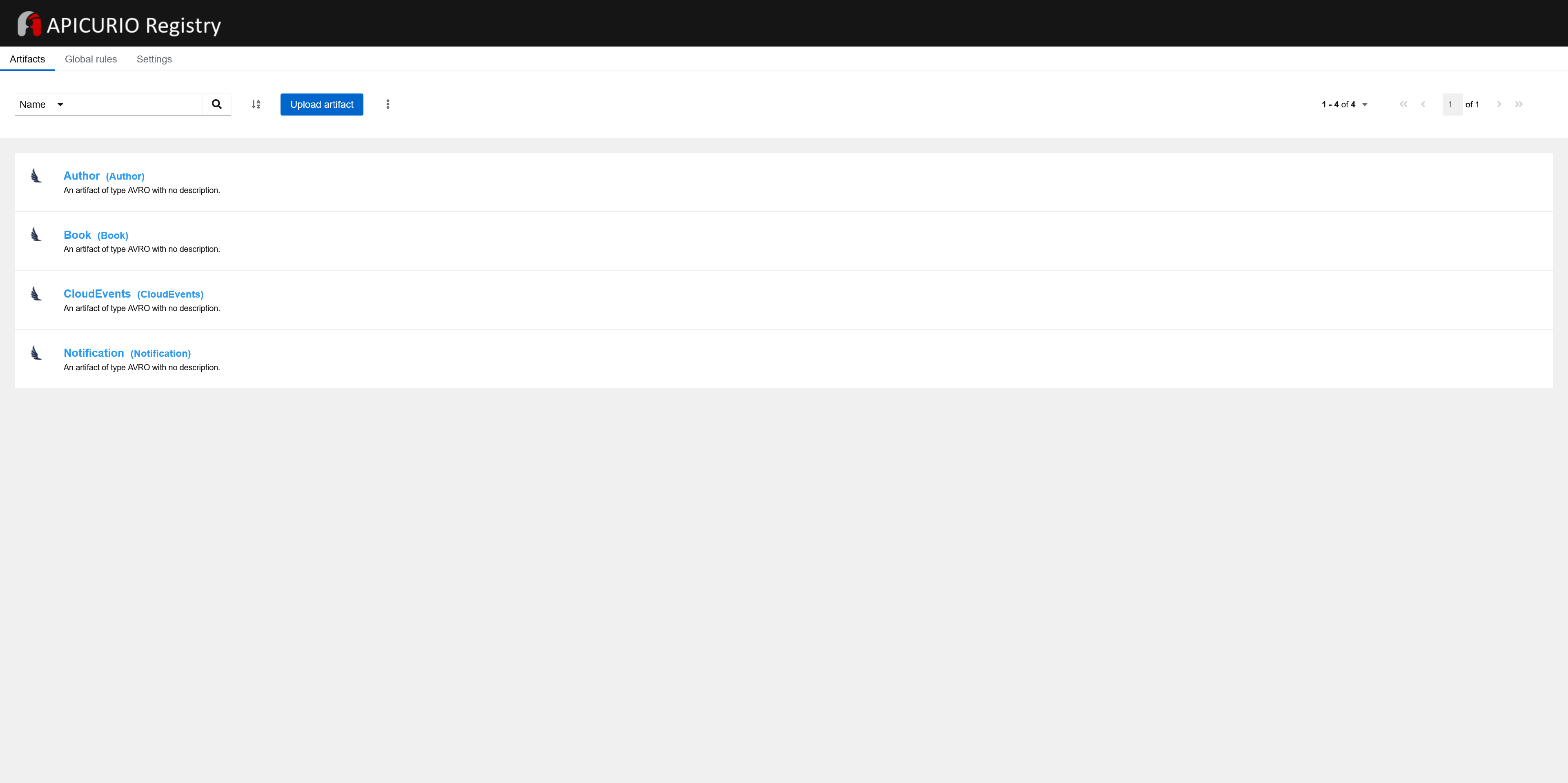

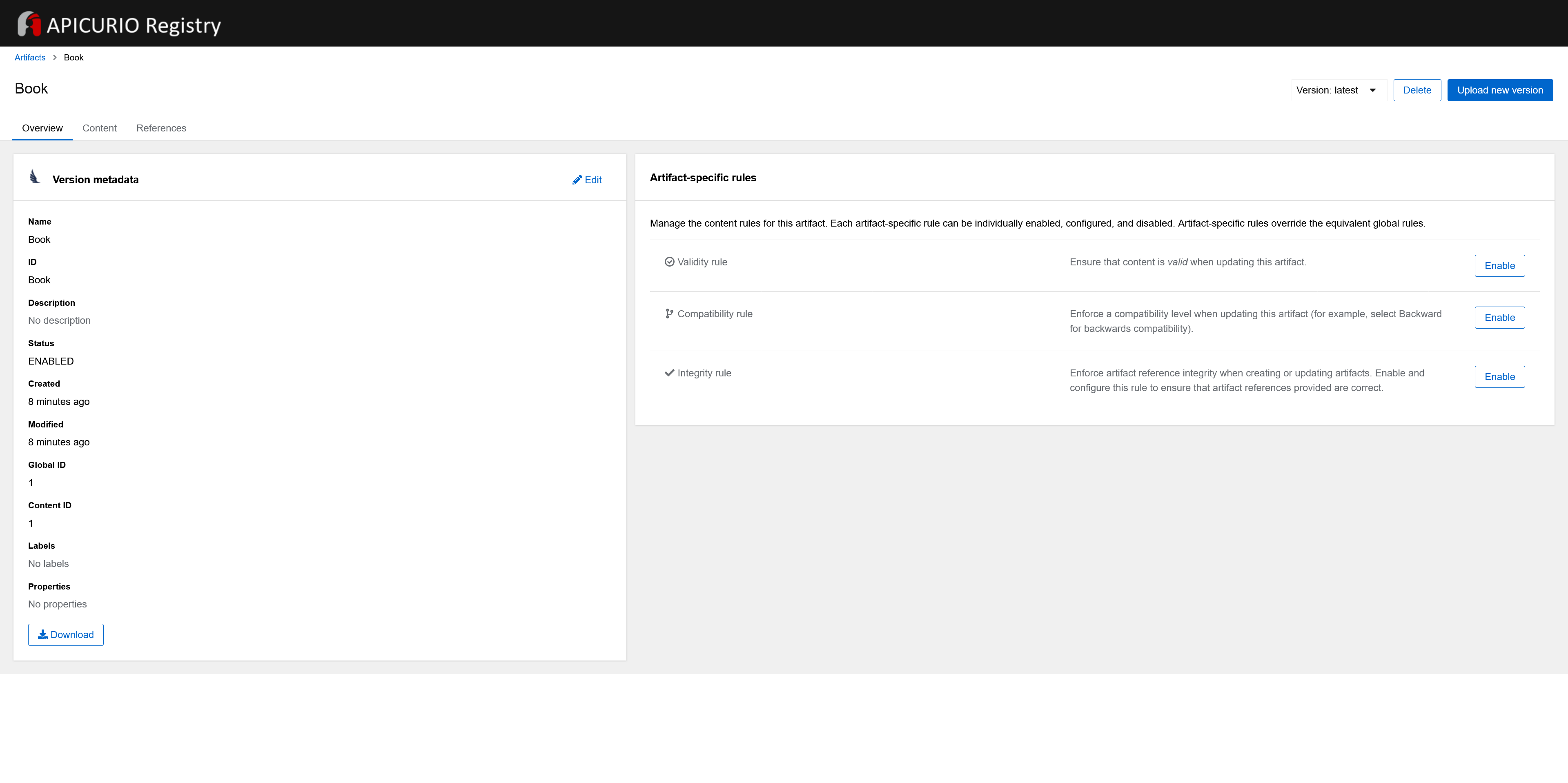

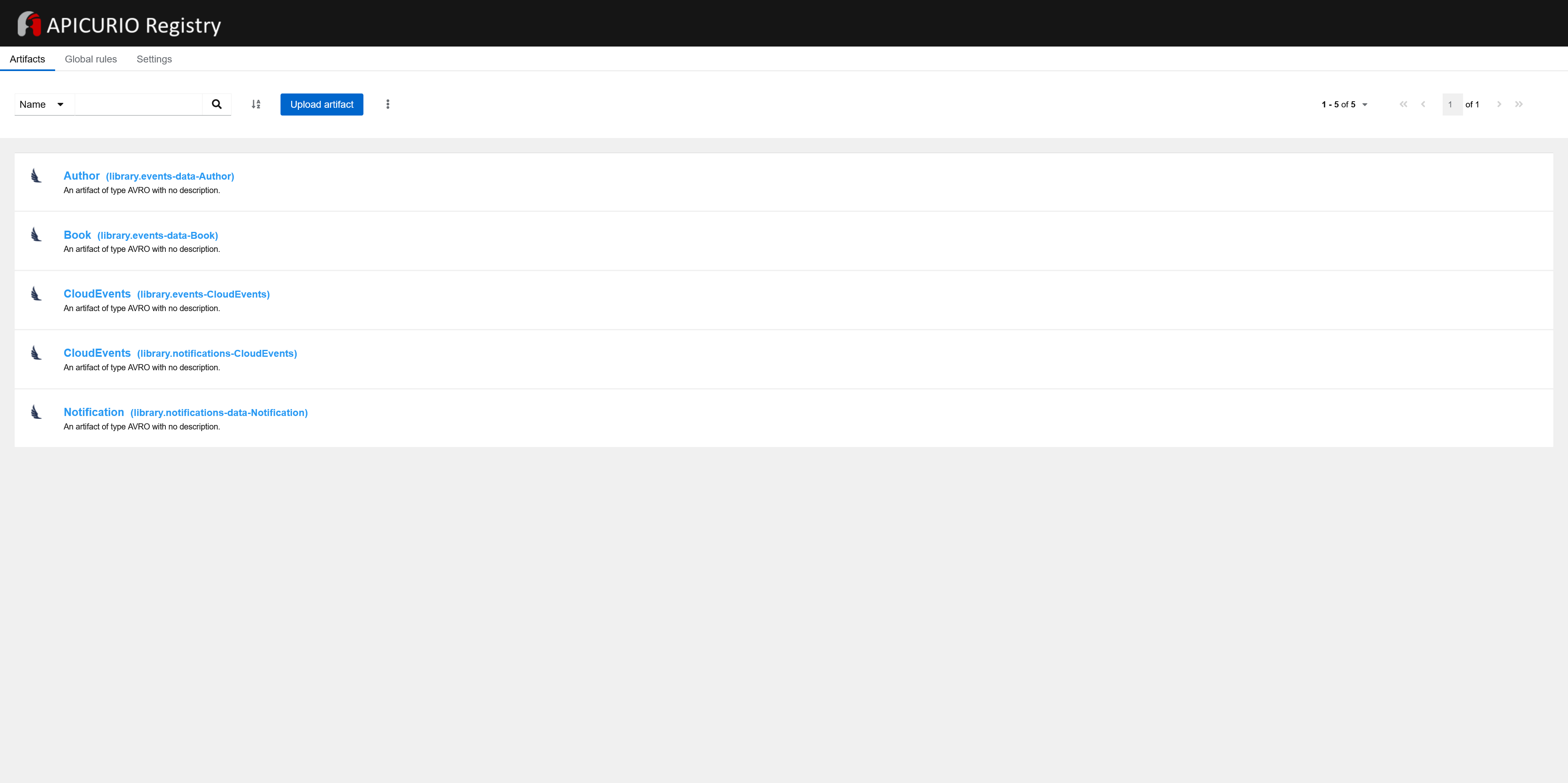

schema registry (Apicurio Registry)

-

reverse proxy (Caddy web server)

-

tools for monitoring: Kafka UI and pgAdmin

compose.yaml and

compose.override.yaml files contain all the listed components.

The project is deployed to a cloud.

How does this architecture work? The following is a sequence of steps that the platform performs after the user has initiated a change in the library data, for example, in a book data, using the library’s REST API; eventually the message about that change will be delivered to the user’s Gmail inbox or to admin HTML page through WebSocket (depending on the settings):

-

The user makes a change in a book data, for example, changes publication year of the book; for that, he performs REST API call

-

book-serviceprocesses a request to its REST API by doing the following inside one transaction:-

stores the book update (

book.booktable) -

inserts an outbox message containing an event of type

BookChangedand its data into thebook.outboxtable

-

-

book.sourceDebezium connector does the following:-

reads the new message from the

book.outboxtable -

converts it to CloudEvents format

-

converts it to Avro format

-

publishes it to a Kafka topic

-

-

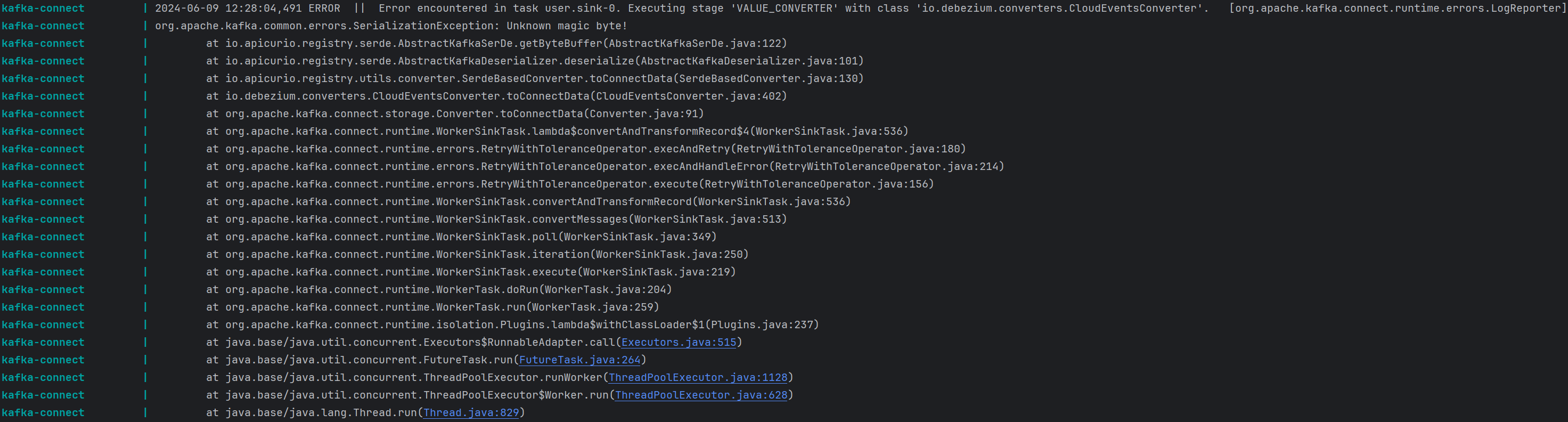

user.sinkDebezium connector does the following:-

reads the message from a Kafka topic

-

deserializes it from Avro format

-

extracts

id,source,type, anddatafrom the CloudEvent and stores them in theuser.inboxtable

-

-

user-servicedoes the following:-

each 5 seconds a task polls the

user.inboxtable for new messages -

the task marks the inbox message to be processed by the current instance of the service

-

starts processing the inbox message of

BookChangedtype in a new virtual thread

If the book is taken by a user, the service:

-

obtains a delta between the latest and the previous states of the book

-

generates a notification for the user about the book change; the message includes the delta obtained above

-

inserts an outbox message containing a command of type

SendNotificationCommandand its data directly into the WAL (unlike tobook-servicewhich inserts messages into itsoutboxtable) -

marks the inbox message as processed

-

-

user.sourceDebezium connector does the following:-

reads the new message from the WAL

-

converts it to CloudEvents format

-

converts it to Avro format

-

publishes it to a Kafka topic

-

-

notification.sinkDebezium connector does the following:-

reads the message from a Kafka topic

-

deserializes it from Avro format

-

extracts

id,source,type, anddatafrom the CloudEvent and stores them in thenotification.inboxtable

-

-

notification-servicedoes the following:-

each 5 seconds a task polls the

notification.inboxtable for new messages -

the task marks the inbox message to be processed by the current instance of the service

-

starts processing the inbox message of

SendNotificationCommandtype in a new virtual thread -

takes a user’s email and the notification text from the message and sends an email or, in a non-testing environment, a notification to an HTML page through WebSocket

-

marks the inbox message as processed

-

This and other use cases will be demonstrated in the Testing section.

Event-driven architecture implies that event producers and event consumers are decoupled and all interactions between microservices are asynchronous. Further, if you use

Transactional outbox and Inbox patterns, you should provide Message relay — a component that sends the messages stored in the outbox to a message broker (NATS, RabbitMQ,

Kafka, etc.) or reads messages from a message broker and stores them in inbox. Message relay can be implemented in different ways, for example, it can be a component of an

application; for Transactional outbox pattern, it will obtain new messages by periodic polling the outbox table. In this project, though, as you have seen above, message

relays are implemented with Kafka Connect and Debezium source/sink connectors. The above leads to the following feature of the described architecture: the microservices

don’t communicate directly neither with each other, nor with a message broker (in this project, it is Kafka). To communicate with each other, services interact only with

their own databases. As you can see in the diagram above, passing a message between two services, that is from an outbox table of a database of one service to an

inbox table of a database of another service, is carried out only by infrastructure components (PostgreSQL, Kafka Connect, Debezium connectors, and Kafka).

In total, the project includes two examples of Transactional outbox pattern (book-service and user-service and their corresponding Debezium source connectors; they differ

in that book-service stores messages in its outbox table while user-service stores messages directly in the WAL) and three examples of Inbox pattern (all three

services and their corresponding Debezium sink connectors; all examples are implemented the same).

In this project, the messages produced by all source connectors (that is messages for interaction between services and for data replication) are delivered exactly-once. But exactly-once message delivery only applies to source connectors, so on the sink side I use upsert semantics that means that even if a message duplicate occurs, a microservice won’t process the same message more than once (that is exactly-one processing). More details on this in the Connectors configuration section.

The whole chain of interactions between microservices — book-service → user-service → notification-service (through PostgreSQL, Kafka Connect with Debezium connectors,

and Kafka) — is a choreography-based implementation of Saga pattern.

The messages through which microservices interact with each other are in CloudEvents (specification for describing event data in a common way) format and are serialized/deserialized using Avro, compact and efficient binary format.

The following table summarizes the stack of technologies used in the project:

| Name | Type |

|---|---|

Microservices |

|

Programming language |

|

Application framework |

|

JDK to compile native executables |

|

ORM |

|

Build tool |

|

Tool to create OCI image of an application |

|

Infrastructure |

|

Database |

|

Event streaming platform |

|

Framework for connecting Kafka with external systems |

|

CDC platform |

|

Specification for describing event data in a common way |

|

Data serialization format |

|

Schema registry |

|

Reverse proxy |

|

Containerization platform |

|

Tool for defining and running multi-container applications |

|

Monitoring |

|

UI for Apache Kafka (Kafka UI) |

Monitoring tool for Kafka |

Administration platform for PostgreSQL |

|

Of course, none of the technologies above are mandatory for the implementation of event-driven architecture and its patterns (in the considered project, this is Transactional outbox, Inbox, and Saga). For example, you can use any non-Java stack to implement microservices, and you don’t have to use Kafka as a message broker. The same applies to any tool listed above since there are alternatives for each of them.

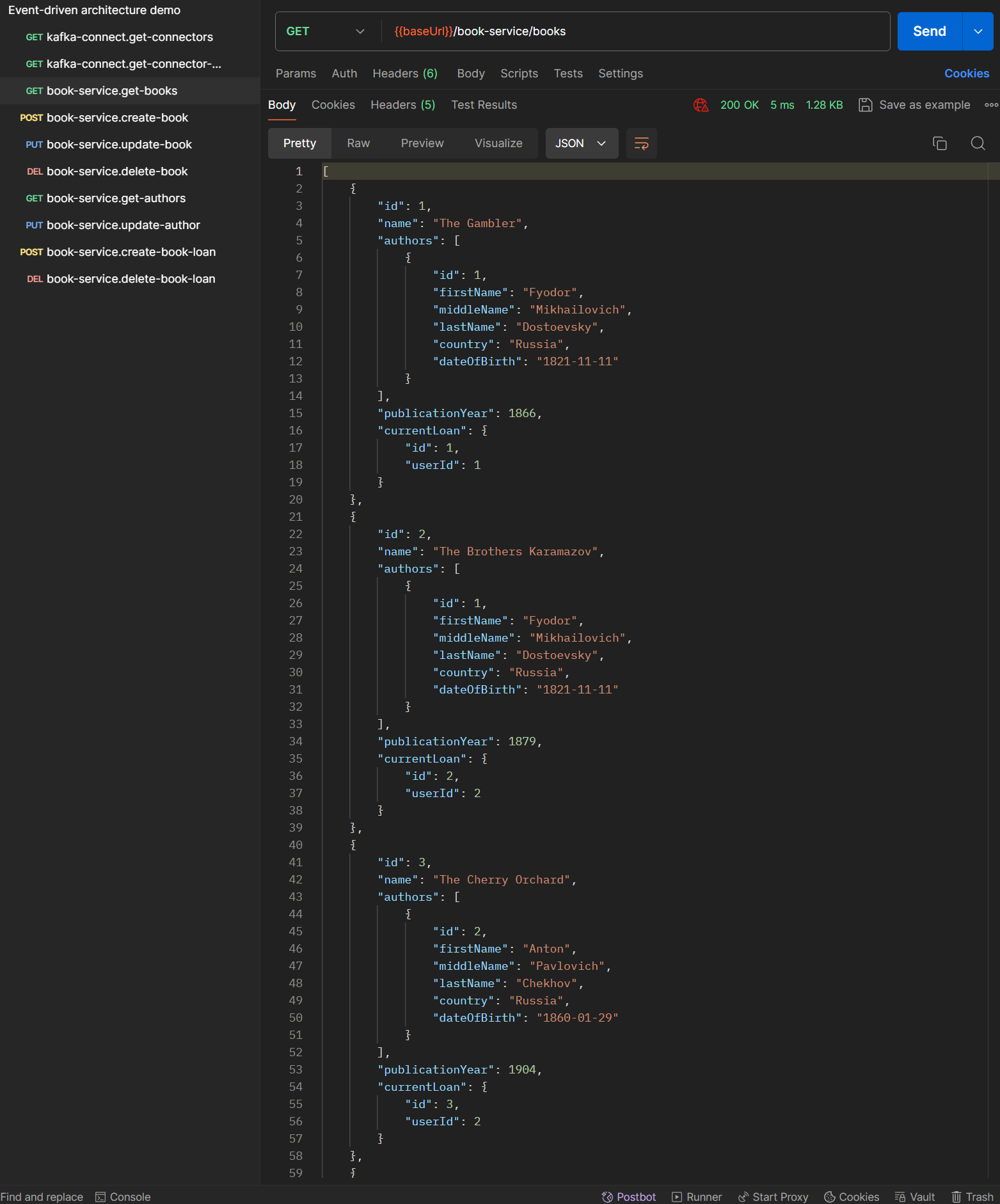

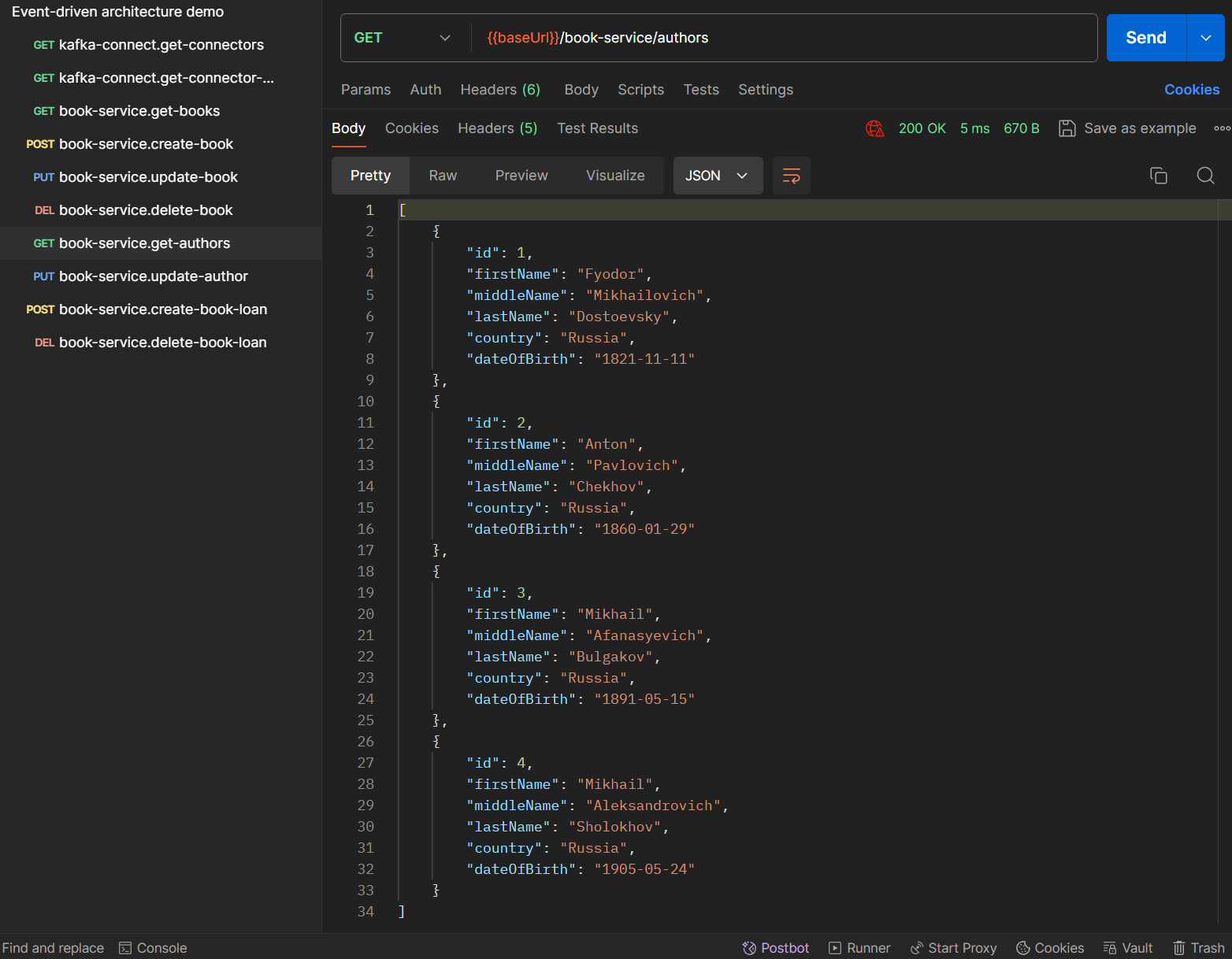

Key domain entities

of the project are Book, Author, BookLoan, and Notification. The library began its work recently, so there are only

a few books in

it. REST API of book-service allows to make CRUD operations on Book and Author entities and to lend/return a book by a user of the library.

I structured the article as follows:

-

implementation of all three microservices

-

setting up of the infrastructure components

The core part is the setting up of Kafka Connect and Debezium connectors.

-

local launch and CI/CD

-

testing

You can read the article in any order, for example, first read about a microservice and then how to set up a connector to that microservice’s database. If you are not a Java/Kotlin developer, you may be more interested in sections covering the setting up of the infrastructure components, specifically, data streaming pipelines; nevertheless, the principles of implementation of the architectural patterns under consideration are the same for applications in different programming languages. Theory will go hand in hand with practice.

Microservices implementation

All three microservices are Spring Boot native applications, use the same stack of technologies, and implement Transactional outbox and Inbox patterns

(only notification-service doesn’t implement Transactional outbox pattern). As said earlier, no one microservice interacts with Kafka; they just put

messages in outbox table or process messages from inbox table. Database change management (that is tables creation and population them with business

data) is performed with Flyway.

Versions of plugins and dependencies used by the services are specified in

gradle.properties file. bootBuildImage Gradle task

of each service is set up to produce a Docker image containing GraalVM native image of an application.

Common model

common-model module unsurprisingly contains the code that is shared by all

three microservices, specifically:

-

You can think of data model classes as a contract for microservices interaction.

Book,Author,BookLoan,CurrentAndPreviousStatemodels are shared betweenbook-serviceanduser-service.CurrentAndPreviousStatestructure is needed when some entity (a book or an author) is updated so the receiving service has different options for how to process that data depending on the use case. In this project, the service determines a delta between current and previous states of an entity and includes that delta to a message that will be sent to a user (messagefield ofNotificationmodel). But instead of obtaining the delta, if a use case requires only the latest state, a service can use only the current state of entity thanks to that universal structure.Notificationmodel is shared betweenuser-serviceandnotification-service. -

AggregateTypeenumeration contains types of entities that can be published in the system.EventTypeenumeration contains all event types that can occur in the system; each microservice uses a limited set of event types. -

runtime hints for Spring Boot framework

You need the runtime hints only if you compile your application to a native image. They provide additional reflection data needed to serialize and deserialize data models at runtime, for example, when a service stores a message in

outboxtable or process a message frominboxtable. This will be discussed in more detail in the Build section.

Book service

This section covers:

-

the basics on how all microservices in this project are implemented

-

implementation of Transactional outbox pattern on the microservice side, specifically, how to store new messages in

outboxtable -

a scenario of usage of replicated data, that is, the data from a database of another microservice

-

an example of Saga pattern with a compensating transaction

-

generation of a microservice’s REST API layer (specifically, controllers and DTOs) with OpenAPI Generator Gradle Plugin

The service stores data about all books and their authors, allows to access and manage that data by its REST API and allows a user to borrow

and return a book. When some domain event occurs (for example, a new book was added to the library or a book was lent by a user), the service

stores an appropriate message in its outbox table implementing thus the first part of Transactional outbox pattern. The second part of the

pattern implementation is to deliver

the message from a microservice’s database to Kafka; it is done using Kafka Connect and Debezium and is discussed in the Connectors configuration section.

The main class looks like this:

@SpringBootApplication

@ImportRuntimeHints(CommonRuntimeHints::class)

@EnableScheduling

class BookServiceApplication

fun main(args: Array<String>) {

runApplication<BookServiceApplication>(*args)

}@ImportRuntimeHints tells the service that additional reflection data should be used by importing it from common-model module.

@EnableScheduling is needed to enable execution of scheduled tasks; this service, as the other two, polls periodically inbox table of its

database for the new messages. This is the part of Inbox pattern implementation that is covered in detail in the User service section.

REST API is described in

OpenAPI format. openApiGenerate task of org.openapi.generator Gradle plugin is configured as shown in the

build script and produces controllers, DTOs,

and delegate interfaces. You need to implement the delegate interfaces to specify how to process incoming requests. This project contains

implementations of delegates for:

-

books REST API:

-

This is a default implementation.

-

This is a limited implementation that permits only read and update operations on a book (that is doesn’t permit create and delete operations). It is used on testing environment (when

testprofile is active).

-

-

authors REST API:

AuthorsApiDelegateImpl

A generated controller calls an implementation (or one of implementations) of an appropriate delegate interface to process an incoming request.

The config for testing environment also enables limited converters for books and authors; that means that a user is allowed to change fewer fields compared to default converters. Also, the values for these fields will be coerced to lie in a given range, for example, when updating a book, a user is allowed to change only its publication year and the value of the field will lie in a range between 1800 and 1950 years even if the user entered a value not in that range.

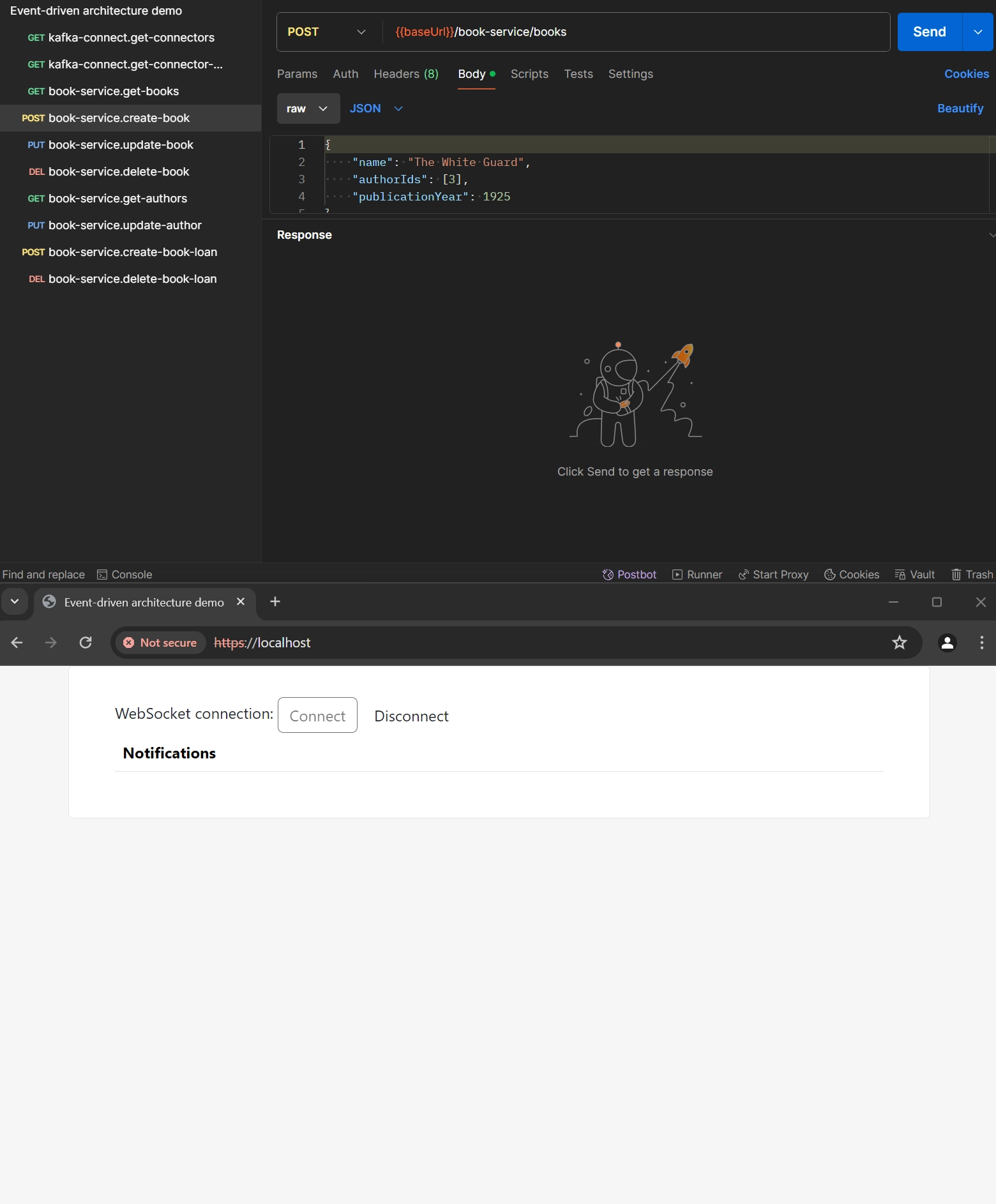

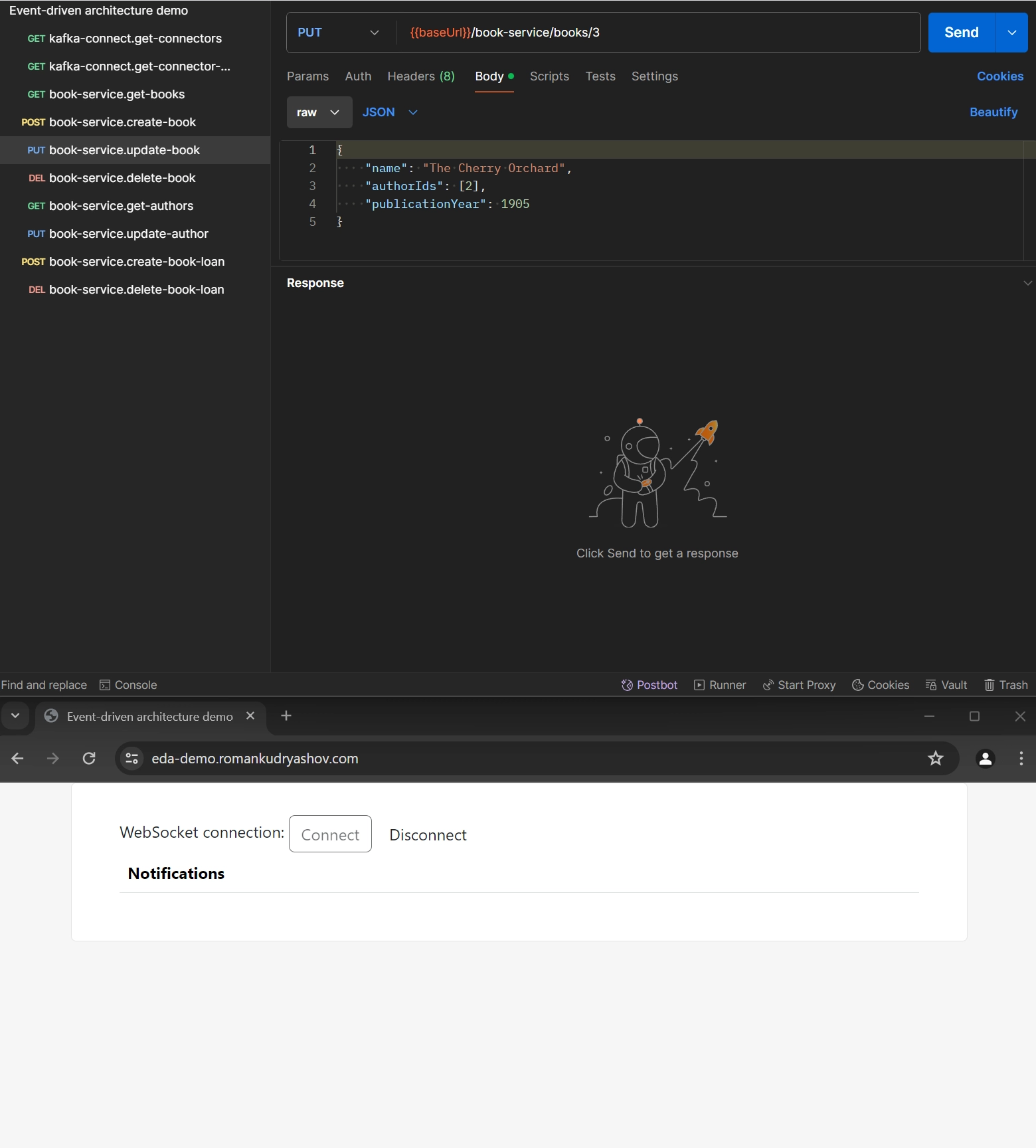

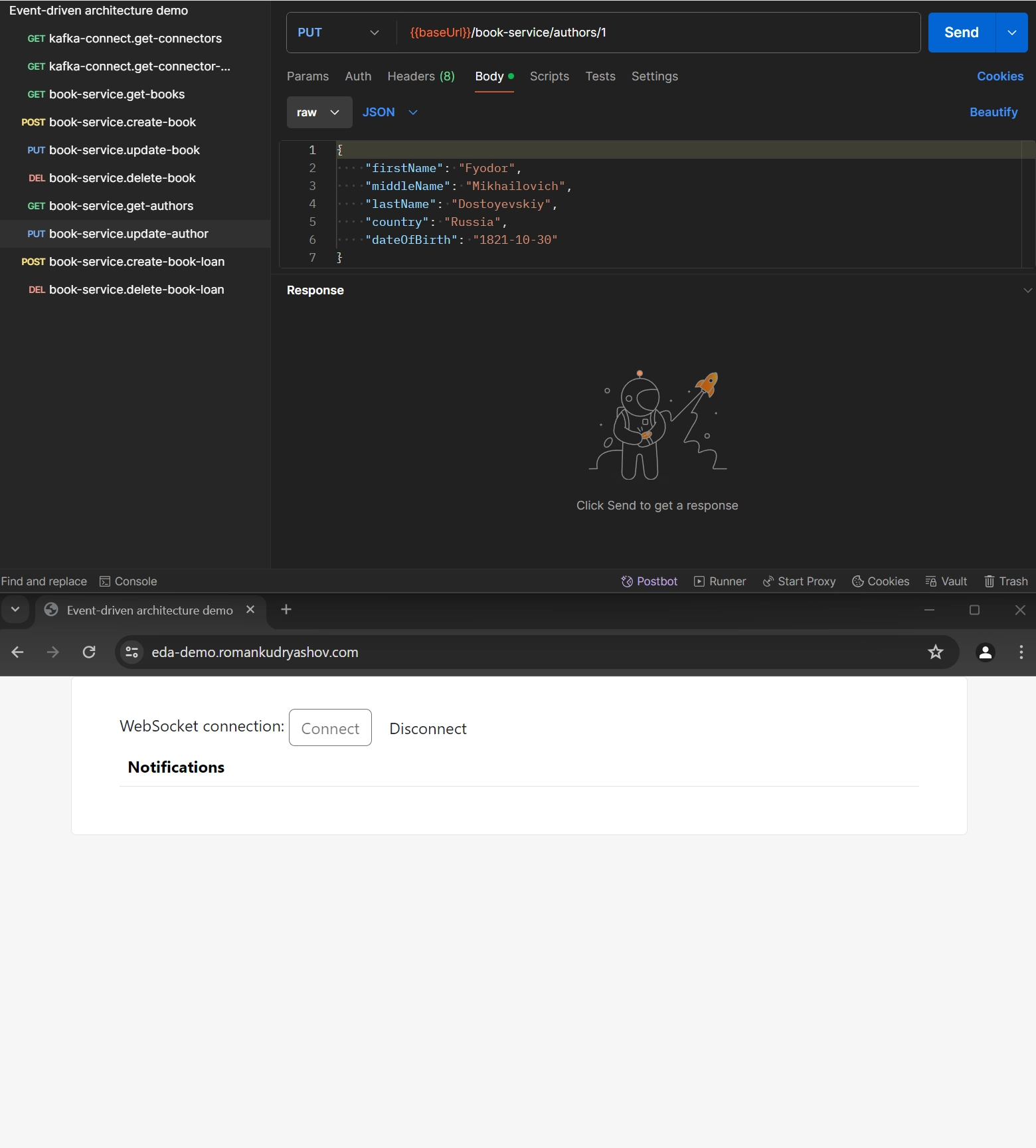

In the introduction, I described the high-level algorithm of what book-service (as a part of a whole system) does when it processes a request to its REST API. Now we

will look at what the service does in detail, including how the Transactional outbox pattern is implemented on the microservice side. Again, we consider an update of a book

scenario; it is initiated by a call of PUT {{baseUrl}}/book-service/books/{bookId} REST API method. You can start the process locally by calling

PUT https://localhost/book-service/books/3 and passing the following request body:

{

"name": "The Cherry Orchard",

"authorIds": [2],

"publicationYear": 1905

}both the considered implementations of BooksApiDelegate interface call

BookService.update() method

which opens a new transaction:

BookService.update() method@Transactional(propagation = Propagation.REQUIRES_NEW, rollbackFor = [Exception::class])

override fun update(id: Long, book: BookToSave): BookDto {

log.debug("Start updating a book: id={}, new state={}", id, book)

val existingBook = getBookEntityById(id) ?: throw NotFoundException("Book", id)

val existingBookModel = bookEntityToModelConverter.convert(existingBook)

val bookToUpdate = bookToSaveToEntityConverter.convert(Pair(book, existingBook))

val updatedBook = bookRepository.save(bookToUpdate)

val updatedBookModel = bookEntityToModelConverter.convert(updatedBook)

outboxMessageService.saveBookChangedEventMessage(CurrentAndPreviousState(updatedBookModel, existingBookModel))

return bookEntityToDtoConverter.convert(updatedBook)

}As said in the introduction, the following two actions are performed inside the transaction:

-

storing an updated book

-

an attempt to find an existed book by id from the request

-

if the book was found, its is updated with the new values of the fields coming from the request (the conversion is done in

BookToSaveToEntityConverterorBookToSaveToEntityLimitedConverter, depending on a Spring profile used) -

the updated book is stored in

booktable throughBookRepository

-

-

storing a new outbox message

This step is a necessary part of implementation of the Transactional outbox pattern that solves dual problem: instead of writing the message to a different system (that is to a message broker), the service stores in the same transaction in the

outboxtable. The step includes the following:-

conversion of the updated book entity (an internal state of a book) to a model (an external state of a book that is intended for interservice communication)

-

call of

OutboxMessageService.saveBookChangedEventMessage()method and passingCurrentAndPreviousStatedata model to it

-

The first action is quite common; let’s consider the second. saveBookChangedEventMessage does the following:

OutboxMessageService.saveBookChangedEventMessage() methodoverride fun saveBookChangedEventMessage(payload: CurrentAndPreviousState<Book>) =

save(createOutboxMessage(AggregateType.Book, payload.current.id, BookChanged, payload))At first, the saveBookChangedEventMessage method creates an instance of OutboxMessageEntity:

OutboxMessageEntity@Entity

@Table(name = "outbox")

class OutboxMessageEntity(

@Id

@Generated

@ColumnDefault("gen_random_uuid()")

val id: UUID? = null,

@Enumerated(value = EnumType.STRING)

val aggregateType: AggregateType,

val aggregateId: Long,

@Enumerated(value = EnumType.STRING)

val type: EventType,

@JdbcTypeCode(SqlTypes.JSON)

val payload: JsonNode

) : AbstractEntity()by using the following method:

OutboxMessageEntityprivate fun <T> createOutboxMessage(aggregateType: AggregateType, aggregateId: Long, type: EventType, payload: T) = OutboxMessageEntity(

aggregateType = aggregateType,

aggregateId = aggregateId,

type = type,

payload = objectMapper.convertValue(payload, JsonNode::class.java)

)-

aggregateTypeisBook -

aggregateIdis id of the changed book; the source connector will use it as key of an emitted Kafka message -

typeisBookChanged -

payloadis an instance of theCurrentAndPreviousStatedata model

BookChanged is one of event

types

in this project. There are two main types of messages in event-driven architectures:

-

events notify that something has changed in a given domain or aggregate.

-

commands are orders to perform a given action. Examples of commands usage will be in the User service section.

In different sources, in addition to the above, you can find also the following message types:

-

queries are messages, or requests, sent to a component to retrieve some information from it.

-

replies are responses to queries.

-

documents are much like events; the service publishes them when a given entity changes, but they contain all the entity’s information, unlike events which typically only have the information related to the change that originated the event

According to this classification, events in this project are documents because they contain the latest state (and some can also contain a previous state of an entity), but I decided to leave familiar naming and call them events.

In the considered scenario, the payload field has the type of the mentioned

CurrentAndPreviousState

model containing both new and old states of the book; using it, any consumer can process the message in any way. For example, one consumer can be only interested in the

latest state of a book, while another needs to receive the delta between the new and the old states of the book. In this project, the only consumer of

BookChanged type of event is user-service that needs the delta to notify a user about changes in the library but still I preferred

more flexible data model which may be suitable for consumers with other needs in the future. Such an approach also allows to use just one

message type (BookChanged) to describe any change in a book instead of multiple events (BookPublicationYearChanged,

BookNameChanged, etc.); but such a structure doesn’t give information about what change triggered the event, unless we specifically add it

to the payload. It should also be noted that such messages need more storage space for Kafka. Do your own research to find out which message

type is best suited for your system: events or documents.

All the fields used in OutboxMessageEntity constructor above except the type field are required by

Outbox Event Router single message transformation; the

type field is required by CloudEvents converter. This will be covered

in the Connectors configuration section. All the fields are mapped to the appropriate columns of the outbox table:

outbox tablecreate table outbox(

id uuid primary key default gen_random_uuid(),

aggregate_type varchar not null,

aggregate_id varchar not null,

type varchar not null,

payload jsonb not null,

created_at timestamptz not null default current_timestamp,

updated_at timestamptz not null default current_timestamp

);The save method stores OutboxMessageEntity through OutboxMessageRepository

in the outbox table:

OutboxMessageEntityprivate fun save(outboxMessage: OutboxMessageEntity) {

log.debug("Start saving an outbox message: {}", outboxMessage)

outboxMessageRepository.save(outboxMessage)

outboxMessageRepository.deleteById(outboxMessage.id!!)

}As you see above, the outbox message is deleted right after its creation. This might be surprising at first, but it makes sense when remembering how log-based CDC

works: it doesn’t examine the actual contents of the table in the database, but instead it tails the append-only transaction log (the WAL). The calls to save() and

deleteById() will create one INSERT and one DELETE entries in the log once the transaction commits. After that, Debezium will process these events: for any INSERT,

a message with the event’s payload will be sent to Apache Kafka; DELETE events, on the other hand, can be ignored because they don’t require any propagation to the

message broker. So we can capture the message added to the outbox table by means of CDC, but when looking at the contents of the table itself, it will always be empty.

This means that no additional disk space is needed for the table and also no separate house-keeping process is required to stop it from growing indefinitely.

In the next section, we will look at another implementation of the Transactional outbox pattern, which stores outbox directly in the WAL, that is, without using the outbox table. We will also look at the advantages and disadvantages of each implementation.

Further, the transaction is committed and the following happens:

-

the controller returns to a user the updated state of the book as the body of the REST response.

-

the new outbox message is passed to the database of the

user-servicethroughbook.sourceanduser.sinkconnectors (we will discuss that in the Connectors configuration section).

The service also is an example of a consumer of data replicated from a database of another service. The database of book-service contains user_replica table with

just two columns:

user_replica tablecreate table user_replica(

id bigint primary key,

status varchar not null

);The source table, library_user of the database of user-service,

has more fields

but in the source connector we specify

that only id and status fields will be included to change event record values. Then the record will be published to the streaming.users Kafka topic which is

listened by the sink connector; it will

insert the records into the user_replica table.

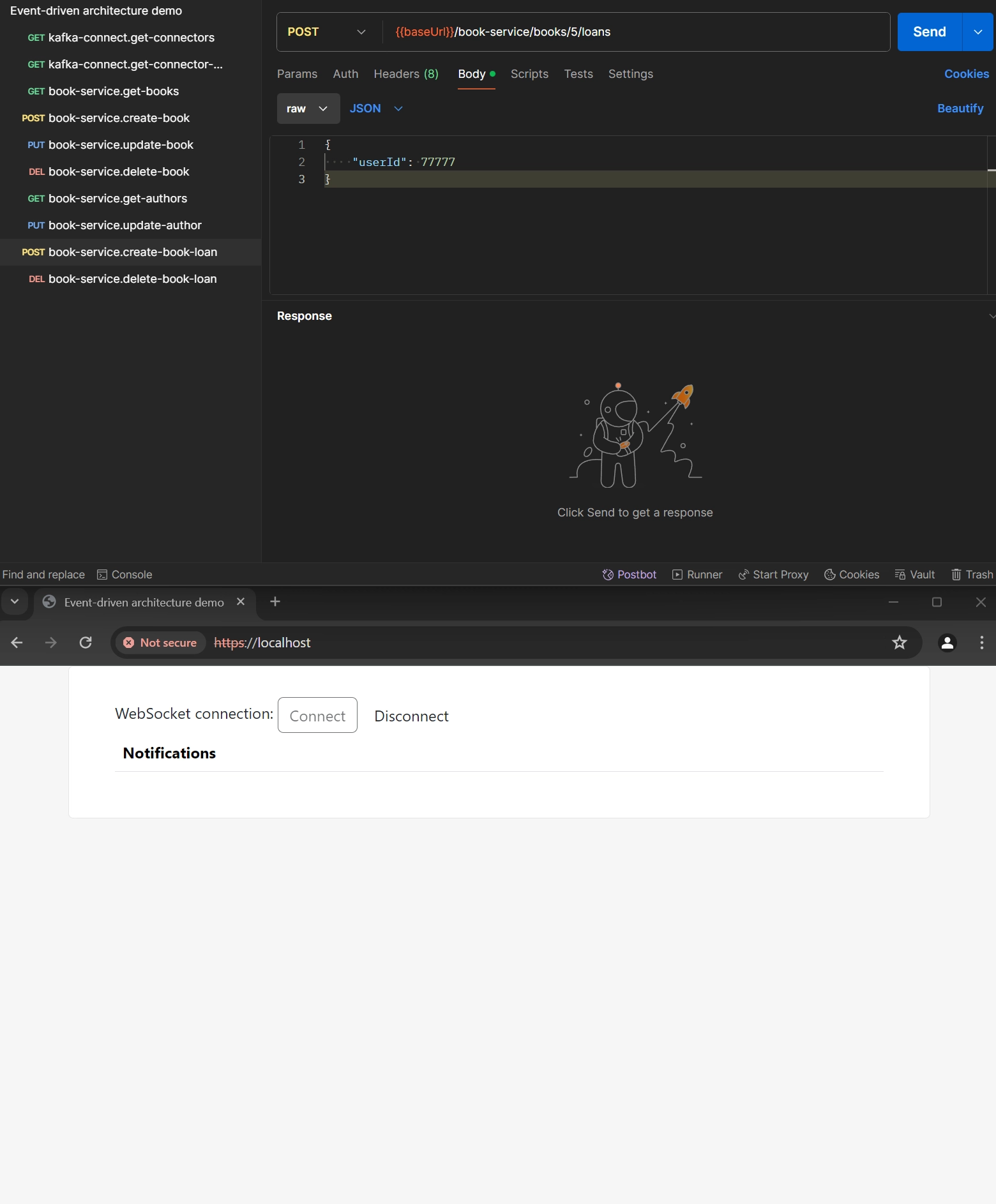

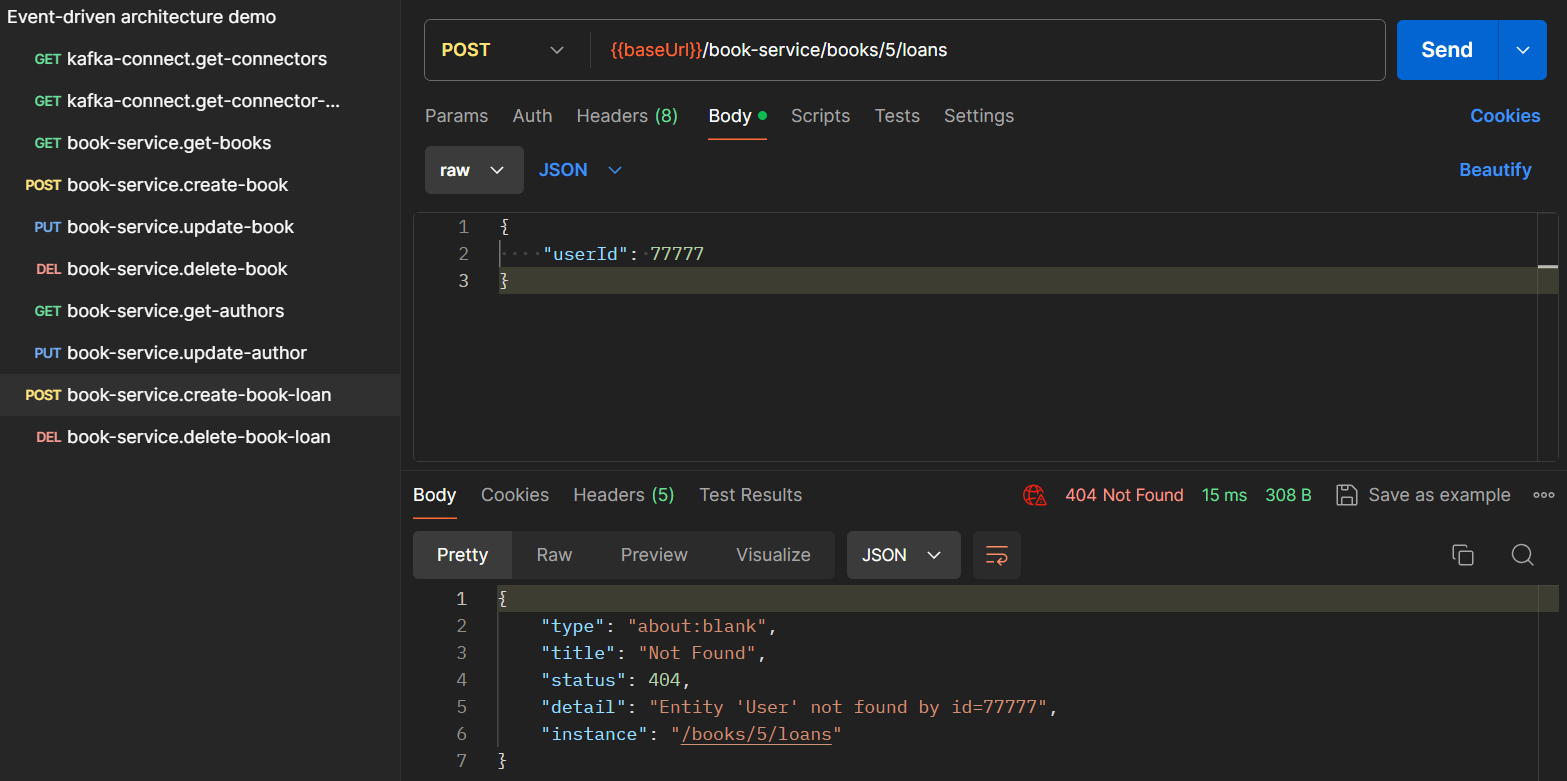

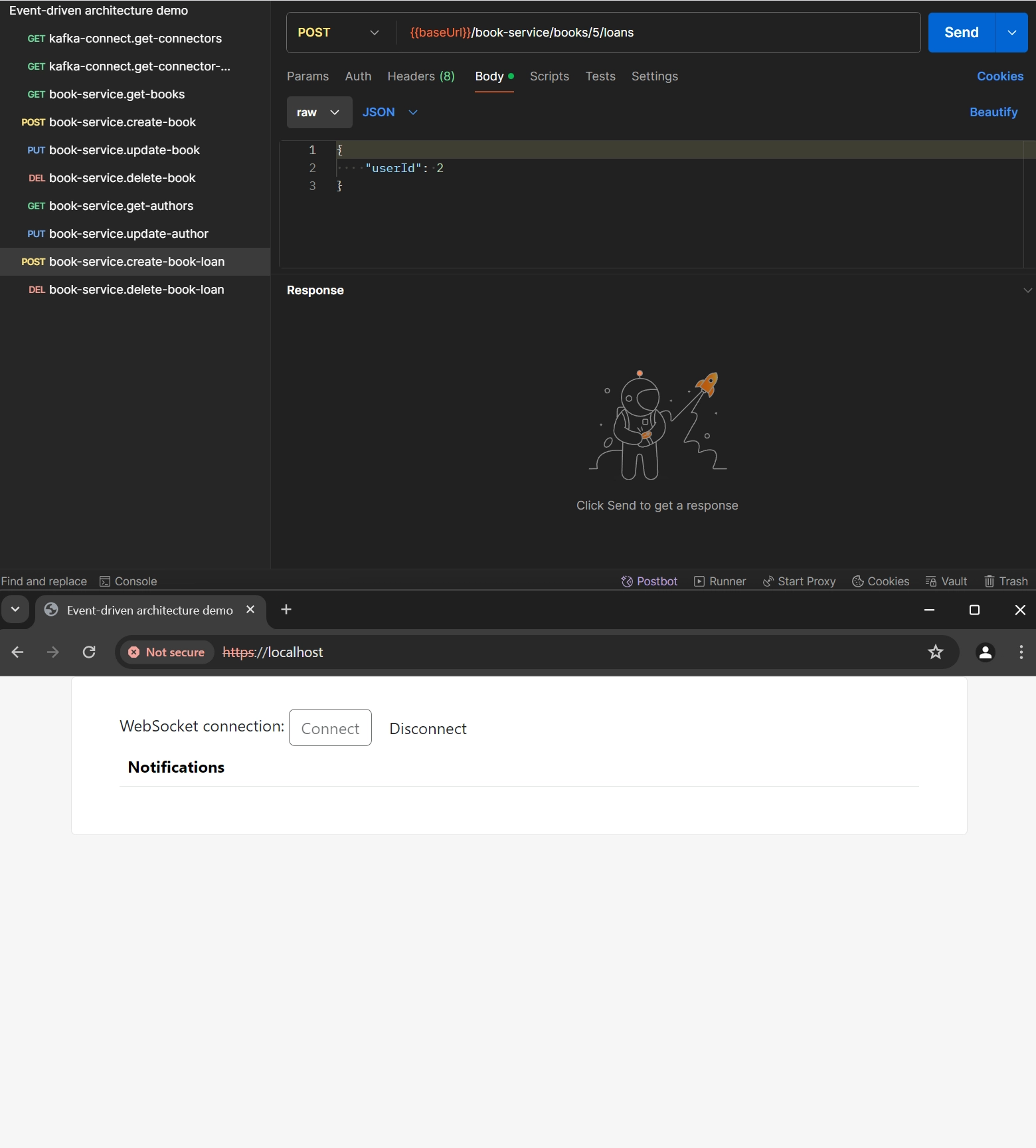

book-service can use the data from the table in a scenario when a user take a book from the library. To lend a book, it is needed to perform

POST {{baseUrl}}/book-service/books/{bookId}/loans request (for example, POST https://localhost/book-service/books/5/loans with the following body:

{

"userId": 2

}The method to borrow a book acts similar to the method of a book update: it starts a new transaction that includes a change of a business data and insertion of an appropriate outbox message:

BookService.lendBook() method@Transactional(propagation = Propagation.REQUIRES_NEW, rollbackFor = [Exception::class])

override fun lendBook(bookId: Long, bookLoan: BookLoanToSave): BookLoanDto {

log.debug("Start lending a book: bookId={}, bookLoan={}", bookId, bookLoan)

val bookToLend = getBookEntityById(bookId) ?: throw NotFoundException("Book", bookId)

if (bookToLend.currentLoan() != null) throw BookServiceException("The book with id=$bookId is already borrowed by a user")

if (useStreamingDataToCheckUser) {

if (userReplicaService.getById(bookLoan.userId) == null) {

throw NotFoundException("User", bookLoan.userId)

}

}

val bookLoanToCreate = bookLoanToSaveToEntityConverter.convert(Pair(bookLoan, bookToLend))

bookToLend.loans.add(bookLoanToCreate)

bookRepository.save(bookToLend)

val lentBookModel = bookEntityToModelConverter.convert(bookToLend)

outboxMessageService.saveBookLentEventMessage(lentBookModel)

return bookLoanEntityToDtoConverter.convert(bookToLend.currentLoan()!!)

}If you set user.check.use-streaming-data: true in the service’s

config,

then useStreamingDataToCheckUser is true which tells the method to use streaming data to check a user before creation of a book

loan: the method tries to find an

active

user with the specified id. If it is successful, the method changes business data (that is, it inserts into the database

BookLoan entity

with specified the book and the user) and stores an outbox message of BookLent type. Otherwise, the method throws an exception and returns it to a user in a

response body.

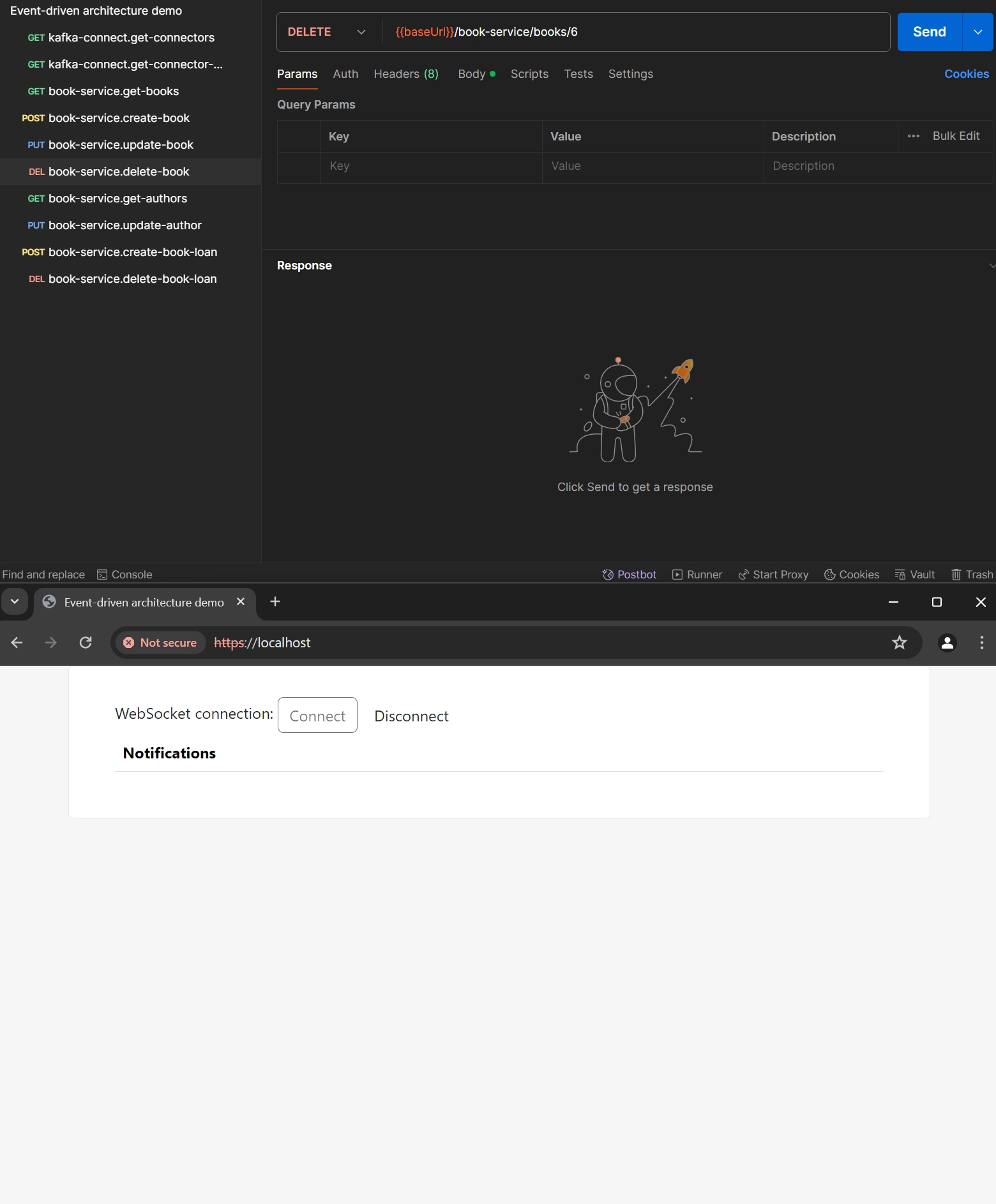

If you set user.check.use-streaming-data: false, the scenario differs from the one discussed above only in that the lendBook() method

skips checking that the specified user exists and is active despite the fact that the user_replica table still exists, that is, this

simulates the case where you have not configured replication of the user data table. However, the further continuation of the saga may

differ because now we rely on the user verification to happen in user-service. If we use the request body above with "userId": 2,

everything is fine, and the saga continues in the next service, notification-service. But if we use in the request body certainly not

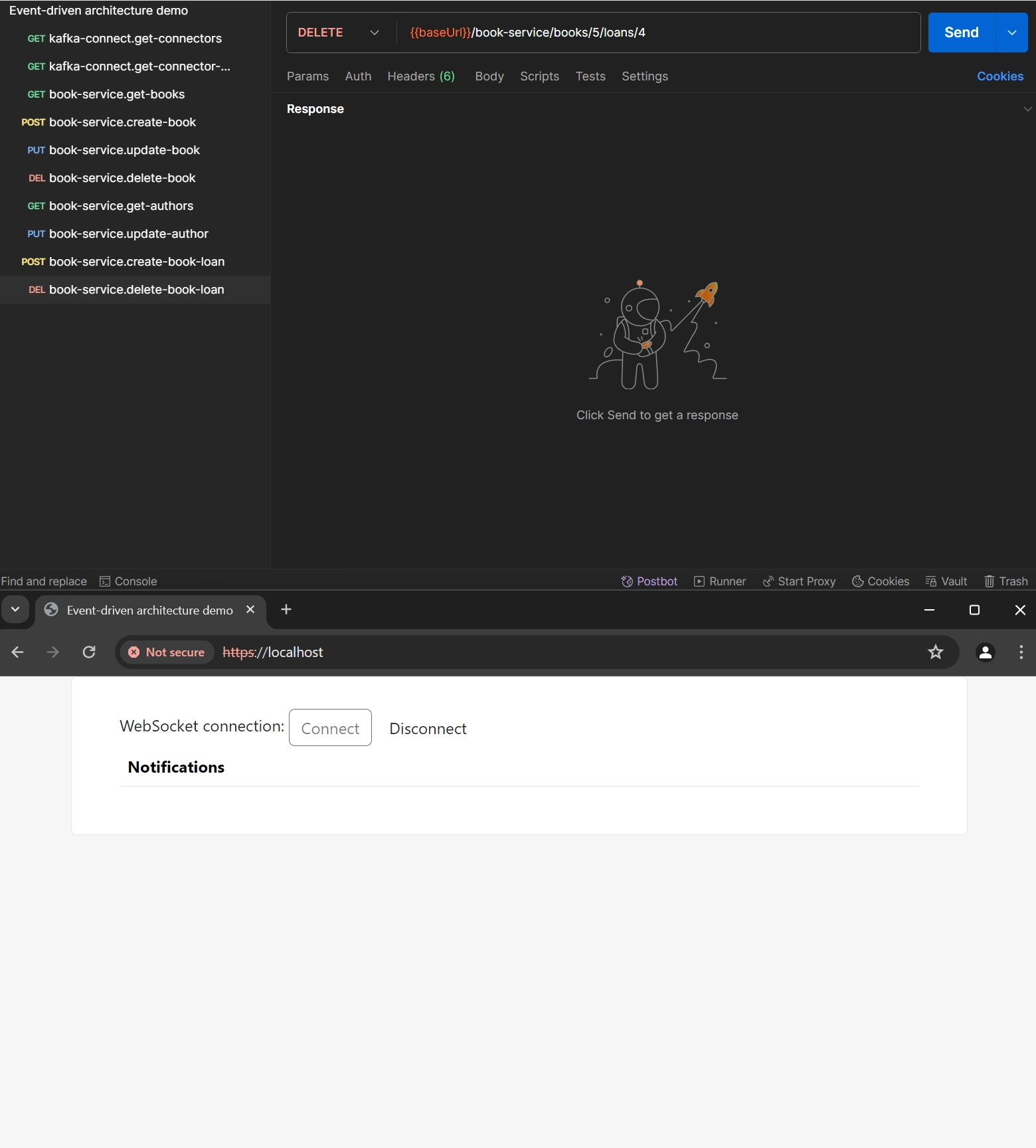

existing in the database userId (for example, 502), the user-service receives a message of BookLent type containing userId value

that doesn’t exist in its library_user table; therefore, user-service publishes a message of RollbackBookLentCommand type which will

mean that book-service should perform a compensating transaction that is to rollback somehow the creation of a BookLoan entity. The

message eventually occurs in inbox table of the database of book-service through

user.source and

book.sink connectors and

is processed

using Inbox pattern. When processing the message, the

cancelBookLoan()

is called:

BookService.cancelBookLoan() method@Transactional(propagation = Propagation.REQUIRES_NEW, rollbackFor = [Exception::class])

override fun cancelBookLoan(bookId: Long, bookLoanId: Long) {

log.debug("Start cancelling book loan for a book: bookId={}, bookLoanId={}", bookId, bookLoanId)

val bookToCancelLoan = getBookEntityById(bookId) ?: throw NotFoundException("Book", bookId)

val modelOfBookToCancelLoan = bookEntityToModelConverter.convert(bookToCancelLoan)

val bookLoanToCancel = bookToCancelLoan.loans.find { it.id == bookLoanId } ?: throw NotFoundException("BookLoan", bookLoanId)

val currentLoan = bookToCancelLoan.currentLoan()

if (currentLoan == null || bookLoanToCancel.id != currentLoan.id) throw BookServiceException("BookLoan with id=$bookLoanId can't be canceled")

bookLoanToCancel.status = BookLoanEntity.Status.Canceled

bookRepository.save(bookToCancelLoan)

outboxMessageService.saveBookLoanCanceledEventMessage(modelOfBookToCancelLoan)

}The method sets the status of the

BookLoan entity

to Canceled. After that, it publishes a message of BookLoanCanceled type that user-service will process as well as one of BookLent type.

cancelBookLoan() method differs

from other methods of BookService in that it is initiated by processing of an inbox message (not by request to REST API of the book-service). RollbackBookLentCommand type is the only inbox message type processed by

book-service.

You will find more details about transaction management when implementing the Inbox pattern in the User service section.

user-service processes all messages published by book-service and we will consider the implementation of Inbox pattern in more detail on

its example (see the User service section), specifically, using messages of the mentioned BookChanged, BookLent, and BookLoanCanceled types.

The following is a combined table containing a

full list

of message types produced by the book-service and a

full list of REST API methods of the service

(Initiated by REST API method table column):

| Message type | Type of message payload | Message meaning | Initiated by REST API method (or type of inbox message) |

|---|---|---|---|

|

|

A book was created |

|

|

|

Data of a book was changed |

|

|

|

A book was deleted |

|

- |

- |

Returns list of all books |

|

|

|

A book was lent to a specified user |

|

|

|

A book loan was cancelled |

( |

|

|

A user returned a book to the library |

|

|

|

Data of an author was changed |

|

- |

- |

Returns list of all authors |

|

Because of, as mentioned above, the service implements Inbox pattern, it would be possible to put all commands for a change in

the library initiated by methods of the REST API to the inbox table for further processing. That would allow to return the response

to a user almost immediately; the response would contain the information that the service accepted the request and will process it soon.

However, it is unknown whether the processing will complete successfully or will fail, so I decided to make the service respond to a

user after committing the changes (updated business data with an appropriate outbox event) to the database. The only event type

processed

by book-service is RollbackBookLentCommand (it is published by user-service).

User service

This section covers:

-

implementation of Inbox pattern on the microservice side, specifically, how to process new messages from

inboxtable -

implementation of Transactional outbox pattern on the microservice side based on direct writes to the WAL and comparison of it with the approach that uses the outbox table

-

some aspects of error handling and transaction management when implementing the Transactional outbox and Inbox patterns

-

how to publish a compensating transaction

-

creation of notifications with custom CSS using

kotlinx-htmllibrary

This service stores information about users of the library. It also processes all the messages from inbox table (the messages are published

by book-service (see the previous section)) implementing thus the second part of Inbox pattern. The first part of the pattern implementation

is to deliver the message from

Kafka to a microservice’s database; it is done using Kafka Connect and Debezium and is discussed in the Connectors configuration section. As a

result of message processing, the service creates commands to send appropriate notifications to the users. For example, if a user borrowed a

book and later the book’s data has changed, the user will receive a notification about that. There is also a scenario when the service shouldn’t

proceed with the message and should publish thus a compensating transaction (a message of RollbackBookLentCommand type); that was considered in the previous

section. As book-service, this service sends the messages using Transactional outbox pattern. The service’s database contains library_user table which

is the data source for user_replica table of the database of book-service.

The main class is the same as in book-service and looks like this:

@SpringBootApplication

@ImportRuntimeHints(CommonRuntimeHints::class)

@EnableScheduling

class UserServiceApplication

fun main(args: Array<String>) {

runApplication<UserServiceApplication>(*args)

}The high-level algorithm of what is done inside user-service (as a part of a whole system) when it processes an inbox message was described in the introduction.

We will now take a detailed look at what the service does, including how the Inbox pattern is implemented on the microservice side. We will continue to use an

update of a book scenario; now it will help us to consider Inbox pattern implementation.

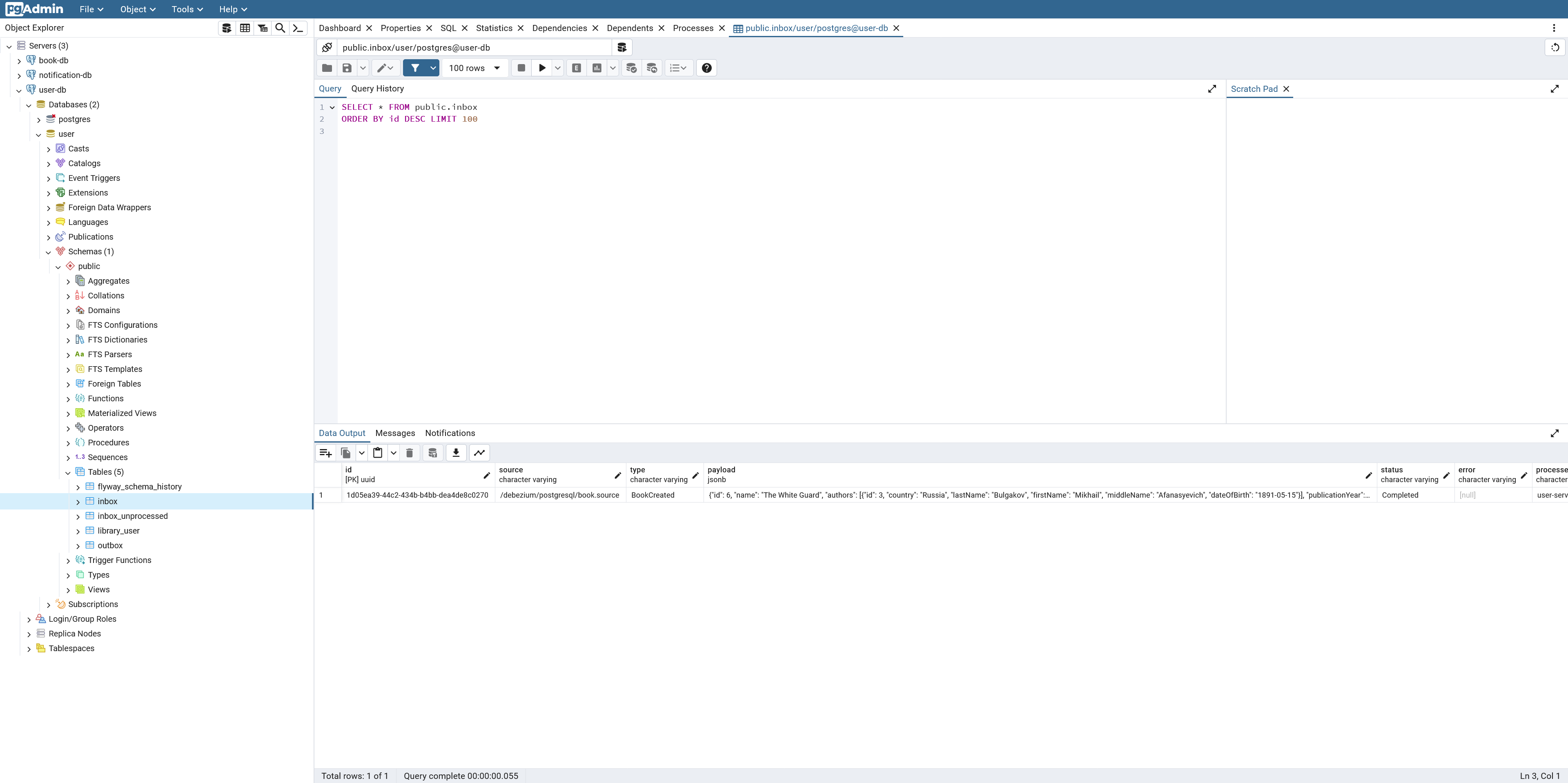

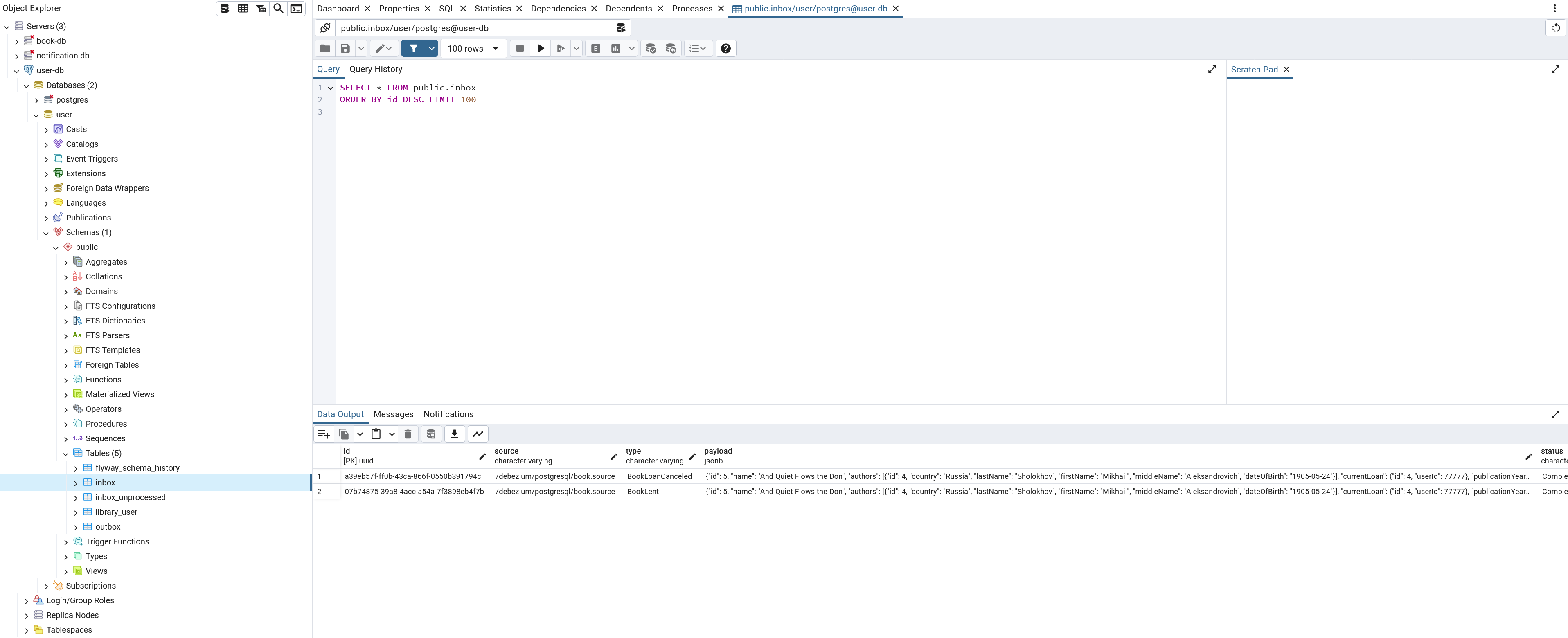

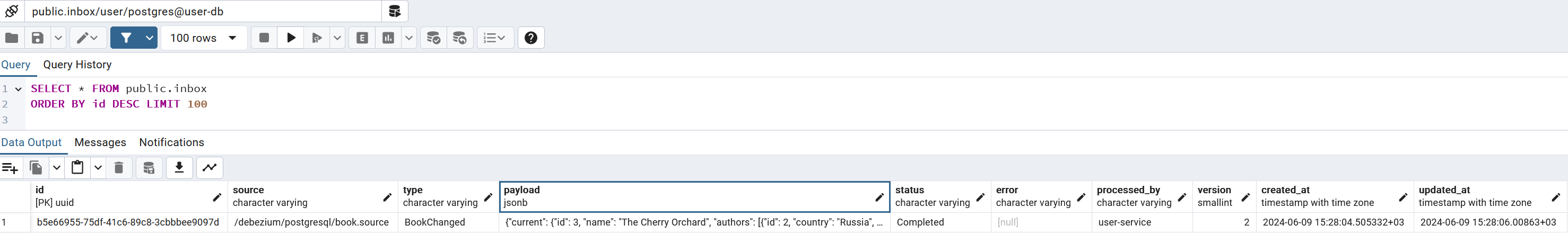

The structure of the inbox table looks like this:

inbox tablecreate table inbox(

id uuid primary key,

source varchar not null,

type varchar not null,

payload jsonb not null,

status varchar not null default 'New',

error varchar,

processed_by varchar,

version smallint not null default 0,

created_at timestamptz not null default current_timestamp,

updated_at timestamptz not null default current_timestamp

);The table is populated with the messages passing in this way: book-service →

book database → book.source

connector → Kafka → user.sink

connector → user database. As you see from the listing above, the messages in inbox table are not in CloudEvents format; instead, they have a custom

structure containing only four columns corresponding to appropriate fields of CloudEvents envelope: id, source, type, and payload (data field of the

envelope). These columns are sufficient for the user-service to process an event. The processing algorithm expects that the payload column contains a

message in JSON format. Using Hibernate, the mapping between the table and InboxMessageEntity looks as follows:

@Entity

@Table(name = "inbox")

class InboxMessageEntity(

@Id

val id: UUID,

val source: String,

@Enumerated(value = EnumType.STRING)

val type: EventType,

@JdbcTypeCode(SqlTypes.JSON)

val payload: JsonNode,

@Enumerated(value = EnumType.STRING)

var status: Status,

var error: String?,

var processedBy: String?,

@Version

val version: Int

) : AbstractEntity() {

enum class Status {

New,

ReadyForProcessing,

Completed,

Error

}

}I designed the processing of inbox messages based on the following requirements to the parallelization of the processing:

-

multiple instances of the service can process different messages independently and in the same time

-

the processing task running on each instance can process multiple messages in parallel

Obviously, we don’t want a message to be processed by more than one instance. For that, I use pessimistic write lock that ensures that the current service instance obtains an exclusive lock on each record (message) from the list to be processed and, therefore, prevents the records from being read, updated or deleted by another service instance.

Further, considering the processing on a service instance, there should be a transaction that acquires the pessimistic write lock when retrieving a batch of inbox messages to process them. If the microservice processes the messages in the same transaction, it can only process them in the sequential manner because it is technically difficult in Spring to process each message in a separate thread but inside the original transaction. Therefore, to support the parallel processing of the messages by a service instance, each message should be processed inside its own separate transaction. Based on the above, I designed the following algorithm of messages processing:

-

defining the list of messages that will be processed by the current instance of the service (marking process)

-

processing the messages intended for the current instance

If there is only one instance of our service, or we don’t need to process the messages by multiple instances, the algorithm will be much simpler,

specifically, we won’t need the first step of the algorithm as we don’t need to define which inbox messages are processed by which instances. If there

are multiple instances of our service, we can use, for example, shedlock library which prevents the task from being executed by more than one instance.

Let’s look at how these thoughts are implemented in code.

InboxProcessingTask

starts processing new messages in the inbox table at a specified interval

(5 seconds) and looks like this:

InboxProcessingTask implementation@Component

class InboxProcessingTask(

private val inboxMessageService: InboxMessageService,

private val applicationTaskExecutor: AsyncTaskExecutor,

@Value("\${inbox.processing.task.batch.size}")

private val batchSize: Int,

@Value("\${inbox.processing.task.subtask.timeout}")

private val subtaskTimeout: Long

) {

private val log = LoggerFactory.getLogger(this.javaClass)

@Scheduled(cron = "\${inbox.processing.task.cron}")

fun execute() {

log.debug("Start inbox processing task")

val newInboxMessagesCount = inboxMessageService.markInboxMessagesAsReadyForProcessingByInstance(batchSize)

log.debug("{} new inbox message(s) marked as ready for processing", newInboxMessagesCount)

val inboxMessagesToProcess = inboxMessageService.getBatchForProcessing(batchSize)

if (inboxMessagesToProcess.isNotEmpty()) {

log.debug("Start processing {} inbox message(s)", inboxMessagesToProcess.size)

val subtasks = inboxMessagesToProcess.map { inboxMessage ->

applicationTaskExecutor

.submitCompletable { inboxMessageService.process(inboxMessage) }

.orTimeout(subtaskTimeout, TimeUnit.SECONDS)

}

CompletableFuture.allOf(*subtasks.toTypedArray()).join()

}

log.debug("Inbox processing task completed")

}

}The method to mark inbox messages to be processed by the current instance receives batchSize — configuration parameter — which tells how

many new messages (ordered by created_at column’s value) in the inbox table should be marked. It looks like this:

@Transactional(propagation = Propagation.REQUIRES_NEW, noRollbackFor = [RuntimeException::class])

override fun markInboxMessagesAsReadyForProcessingByInstance(batchSize: Int): Int {

fun saveReadyForProcessing(inboxMessage: InboxMessageEntity) {

log.debug("Start saving a message ready for processing, id={}", inboxMessage.id)

if (inboxMessage.status != InboxMessageEntity.Status.New) throw UserServiceException("Inbox message with id=${inboxMessage.id} is not in 'New' status")

inboxMessage.status = InboxMessageEntity.Status.ReadyForProcessing

inboxMessage.processedBy = applicationName

inboxMessageRepository.save(inboxMessage)

}

val newInboxMessages = inboxMessageRepository.findAllByStatusOrderByCreatedAtAsc(InboxMessageEntity.Status.New, PageRequest.of(0, batchSize))

return if (newInboxMessages.isNotEmpty()) {

newInboxMessages.forEach { inboxMessage -> saveReadyForProcessing(inboxMessage) }

newInboxMessages.size

} else 0

}Eventually, each message in the batch is updated (see saveReadyForProcessing method) with the new status (ReadyForProcessing) and the name

of the instance that should process the message. The method returns the number of messages that were marked.

The messages to process are retrieved using

InboxMessageRepository

with the aforementioned pessimistic write lock:

interface InboxMessageRepository : JpaRepository<InboxMessageEntity, UUID> {

@Lock(LockModeType.PESSIMISTIC_WRITE)

fun findAllByStatusOrderByCreatedAtAsc(status: InboxMessageEntity.Status, pageable: Pageable): List<InboxMessageEntity>

...

}The lock is in effect within the transaction that wraps markInboxMessagesAsReadyForProcessingByInstance method.

Next, the algorithm retrieves messages that are intended to pe processed by the current instance using getBatchForProcessing method. The

messages are processed in parallel using the following approach:

val subtasks = inboxMessagesToProcess.map { inboxMessage ->

applicationTaskExecutor

.submitCompletable { inboxMessageService.process(inboxMessage) }

.orTimeout(subtaskTimeout, TimeUnit.SECONDS)

}

CompletableFuture.allOf(*subtasks.toTypedArray()).join()Usage of applicationTaskExecutor bean of AsyncTaskExecutor type allows to process each inbox message in a separate thread. The subtaskTimeout parameter

specifies how long to wait before completing a subtask exceptionally with a TimeoutException. The thread of the main task will wait until all subtasks finish,

successfully or exceptionally. The applicationTaskExecutor bean is constructed as follows:

applicationTaskExecutor bean@Bean(TaskExecutionAutoConfiguration.APPLICATION_TASK_EXECUTOR_BEAN_NAME)

fun asyncTaskExecutor(): AsyncTaskExecutor = TaskExecutorAdapter(Executors.newVirtualThreadPerTaskExecutor())This way we provide custom applicationTaskExecutor bean that overrides the default one; it uses JDK 21’s

Virtual Threads starting a new virtual Thread for

each task.

Each inbox message is processed as follows:

@Transactional(propagation = Propagation.REQUIRES_NEW, noRollbackFor = [RuntimeException::class])

override fun process(inboxMessage: InboxMessageEntity) {

log.debug("Start processing an inbox message with id={}", inboxMessage.id)

if (inboxMessage.status != InboxMessageEntity.Status.ReadyForProcessing)

throw UserServiceException("Inbox message with id=${inboxMessage.id} is not in 'ReadyForProcessing' status")

if (inboxMessage.processedBy == null)

throw UserServiceException("'processedBy' field should be set for an inbox message with id=${inboxMessage.id}")

try {

incomingEventService.process(inboxMessage.type, inboxMessage.payload)

inboxMessage.status = InboxMessageEntity.Status.Completed

} catch (e: Exception) {

log.error("Exception while processing an incoming event (type=${inboxMessage.type}, payload=${inboxMessage.payload})", e)

inboxMessage.status = InboxMessageEntity.Status.Error

inboxMessage.error = e.stackTraceToString()

}

inboxMessageRepository.save(inboxMessage)

}The configuration of transaction management specifies that:

-

the method should be wrapped in a new transaction

-

RuntimeExceptionand its subclasses must not cause a transaction rollback

I wrapped incomingEventService.process method call in try/catch to prevent possibility of endless number of attempts to process an inbox message;

otherwise, in case of an exception in that method, the status of the inbox message would never change and always be ReadyForProcessing. The considered

implementation makes only one attempt to process a message.

Next, InboxMessageService delegates processing of a business event inside a message (inboxMessage.payload) to

IncomingEventService:

@Transactional(propagation = Propagation.NOT_SUPPORTED)

override fun process(eventType: EventType, payload: JsonNode) {

log.debug("Start processing an incoming event: type={}, payload={}", eventType, payload)

when (eventType) {

BookCreated -> processBookCreatedEvent(getData(payload))

BookChanged -> processBookChangedEvent(getData(payload))

BookDeleted -> processBookDeletedEvent(getData(payload))

BookLent -> processBookLentEvent(getData(payload))

BookLoanCanceled -> processBookLoanCanceledEvent(getData(payload))

BookReturned -> processBookReturnedEvent(getData(payload))

AuthorChanged -> processAuthorChangedEvent(getData(payload))

else -> throw UserServiceException("Event type $eventType can't be processed")

}

}The configuration of transaction management specifies that the original transaction started in InboxMessageService.process() method should be

suspended; thus, any downstream error won’t affect the original transaction.

In the considered scenario of a book update, the processing of the event looks like this:

BookChanged eventprivate fun processBookChangedEvent(currentAndPreviousState: CurrentAndPreviousState<Book>) {

val oldBook = currentAndPreviousState.previous

val newBook = currentAndPreviousState.current

val currentLoan = newBook.currentLoan

if (currentLoan != null) {

val bookDelta = deltaService.getDelta(newBook, oldBook)

val notification = notificationService.createNotification(

currentLoan.userId,

Notification.Channel.Email,

BaseNotificationMessageParams(BookChanged, newBook.name, bookDelta)

)

outboxMessageService.saveSendNotificationCommandMessage(notification, currentLoan.userId)

}

}If the book is borrowed by a user, IncomingEventService:

-

obtains a delta between current a previous states of the book

-

creates a notification for the user that includes the delta

-

stores an outbox message containing the notification command

It turns out that in the scenario under consideration, the Inbox pattern implementation is followed by the Transactional outbox pattern implementation.

Saving an outbox message is implemented in a separate transaction and looks like this:

@Service

class OutboxMessageServiceImpl(

private val outboxMessageRepository: OutboxMessageRepository,

private val objectMapper: ObjectMapper

) : OutboxMessageService {

...

@Transactional(propagation = Propagation.REQUIRES_NEW, rollbackFor = [Exception::class])

override fun saveSendNotificationCommandMessage(payload: Notification, aggregateId: Long) =

save(createOutboxMessage(AggregateType.Notification, null, SendNotificationCommand, payload))

private fun <T> createOutboxMessage(aggregateType: AggregateType, aggregateId: Long?, type: EventType, payload: T) = OutboxMessage(

aggregateType = aggregateType,

aggregateId = aggregateId,

type = type,

topic = outboxEventTypeToTopic[type] ?: throw UserServiceException("Can't determine topic for outbox event type `$type`"),

payload = objectMapper.convertValue(payload, JsonNode::class.java)

)

private fun <T> save(outboxMessage: OutboxMessage<T>) {

log.debug("Start saving an outbox message: {}", outboxMessage)

outboxMessageRepository.writeOutboxMessageToWalInsideTransaction(outboxMessage)

}

}The considered implementation of the Transactional outbox pattern has two changes compared to the one considered in Book service section:

-

the transaction includes only one operation

In this scenario, only an outbox message is stored during the transaction, and it is not needed to store any "business" data (unlike

book-servicethat also stores data about books and authors). Of course, you can include any change of any business entity in the transaction if that is required by your use case. -

an outbox message (serialized to JSON) is stored directly in the WAL

Therefore, the outbox table is not needed. This is done using

pg_logical_emit_message()Postgres system function.

To send an outbox message directly to the WAL, I implemented the following repository:

@Repository

class OutboxMessageRepository(

private val entityManager: EntityManager,

private val objectMapper: ObjectMapper

) {

fun <T> writeOutboxMessageToWalInsideTransaction(outboxMessage: OutboxMessage<T>) {

val outboxMessageJson = objectMapper.writeValueAsString(outboxMessage)

entityManager.createQuery("SELECT pg_logical_emit_message(true, 'outbox', :outboxMessage)")

.setParameter("outboxMessage", outboxMessageJson)

.singleResult

}

}This and other possible applications of pg_logical_emit_message() function are considered in this

blog post. We will discuss WAL and how it works in detail in the Persistence layer section.

Both approaches to implementing the Transactional outbox pattern result in a message appearing in the WAL, so both approaches can be used in conjunction with a Debezium source connector. Let’s look at the main differences between them:

-

the approach using the

outboxtable:-

allows you to control how long outgoing messages are stored in the table.

In this project, as shown in Book service section, the messages are deleted right after their creation, therefore, in both cases, you won’t find a message in the database. But I don’t rule out that you might need to store outgoing messages for some time, for example, for troubleshooting, monitoring, or distributed tracing purposes: this can help you to track a message path in the distributed system.

-

-

the approach using direct writes to the WAL:

-

avoids any housekeeping needs.

Specifically, there is no need for any maintenance of the

outboxtable and removal of the messages after they have been consumed from the WAL. -

emphasizes the nature of an outbox being an append-only medium: messages must never be modified after being added to the outbox, which might happen by accident with the table-based approach.

-

if you are using a database other than PostgreSQL, you should check if your database supports direct writes of messages to its write-ahead log as well as discover whether Debezium can process such messages.

-

If you use the latter approach, you need to define a common message format for all the emitted events, since there is no table schema that a message must

conform to. You can, for example, derive that format from a set of outbox table

columns. I implement OutboxMessage DTO to have a structure

similar to the outbox table considered in the previous section:

data class OutboxMessage<T>(

val id: UUID = UUID.randomUUID(),

val aggregateType: AggregateType,

val aggregateId: Long?,

val type: EventType,

val topic: String,

val payload: T

)The DTO has an additional topic field that determines the last part of a topic name to which a message should be sent;

it

can be either notifications or rollback depending on a message type so a resulting topic name derived by the

appropriate connector will be either

library.notifications or library.rollback.

In this project, the order of inbox messages processing is not the main concern: it is supposed that the main library entities such as books and authors

are not changed very often. But if in your case it is important to process inbox message in the same order in which they were produced, you need to

do your own research and change the algorithm. While we assume that the order is preserved when the messages from outbox table of book database

are passed to inbox table of user database (Kafka guarantees the order within a partition, and it is supposed that both source and sink connectors

also do it), we need to ensure that the inbox messages are processed by user-service in the same order. For example, in the considered

scenario, how can we ensure that two notifications are created by user-service in the same order in which two updates of the same book occurred

in book-service (let’s suppose that the updates occurred almost simultaneously)? First, in this case, the processing of the inbox messages must

not be parallelized neither between multiple instances of the service nor between multiple threads inside an instance. For example, if we don’t

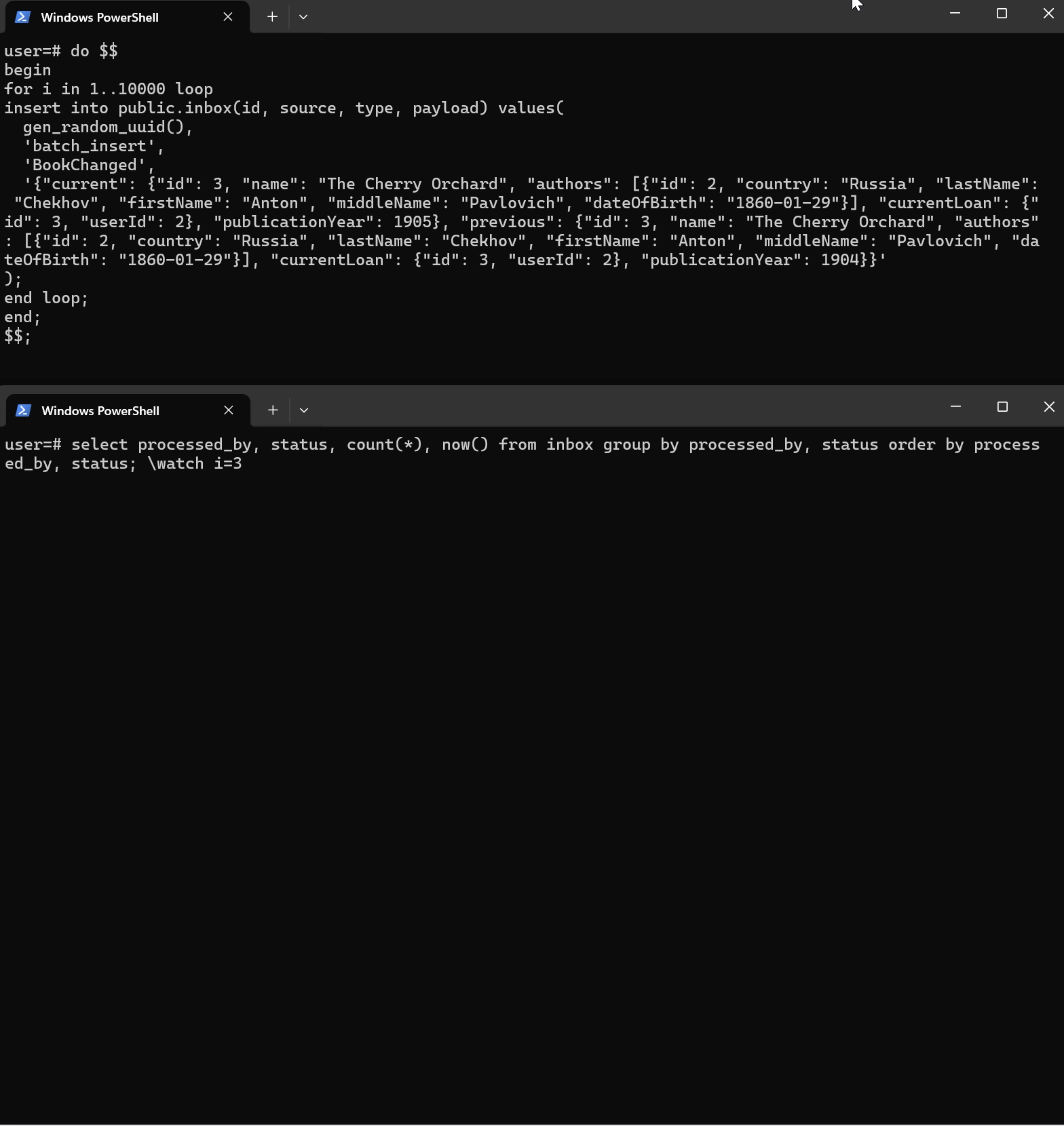

meet the first condition, and the contents of the inbox table looks like this:

it is not guaranteed that uuid1 message will be processed by one instance before uuid51 message will be processed by another instance (considering

the batch size parameter is 50). Therefore, it is possible that notifications will be delivered to a user that borrowed Book 1 in a different order than

the original.

The same logic applies to the case when one instance processes inbox messages about changes in the same book in two threads.

Therefore, both updates of the same book should be processed by the same instance and sequentially. To do that, considering that we are using Kafka,

we should use the same mechanism by which it is guaranteed that messages within a Kafka partition are inserted into inbox table in the same order in

which they were inserted into outbox table. It is keys of Kafka messages. The produced Kafka messages about two updates should have the same key;

for that, I use id of the book by configuring Outbox Event Router

in book.source connector (see

transforms.outbox.table.field.event.key property) that will be discussed in detail in the Connectors configuration section. We can add event_key

column to inbox table and, use it in where clause when selecting messages to process by a service instance, and then process messages with the same

key sequentially.

If you understand that your service will not process a large number of messages, and one instance of the service is enough for that, you might consider processing all new messages (that is, from all Kafka partitions of a topic) sequentially by that instance.

Messages of other types are processed similarly, but it should be noted who is notified in different cases:

-

BookCreated,BookDeletedtypes — all users of the library -

BookLent— a user that borrowed the book -

BookLoanCanceled— a user that tried to borrow the book but that was canceled -

BookReturned— a user that returned the book -

AuthorChanged— users who borrowed books written by the author

Let’s talk about how to initiate a compensating transaction for the scenario discussed in the previous section. Remember, when the user-service

receives a message of BookLent type containing userId value that doesn’t exist in its library_user table it should publish a message of

RollbackBookLentCommand type that should initiate execution of a compensating transaction in book-service that is to rollback somehow the creation

of a BookLoan entity. There is nothing unusual in the implementation of this scenario: the service performs user check and if a user is not found, a

message of RollbackBookLentCommand type is stored in the outbox table (in the same way as is stored a command to send a notification):

private fun processBookLentEvent(book: Book) {

...

val user = userService.getById(bookLoan.userId)

// book can't be borrowed because the user doesn't exist

if (user == null) {

outboxMessageService.saveRollbackBookLentCommandMessage(book)

}

...

}I don’t remove the messages from inbox table immediately after the processing because I think, there are cases when you may need a message some time after

processing, for example, to repeat it or investigate an error. But definitely, after some time, you can delete the message; for that, you may need to

implement separate house-keeping process to stop the table from growing indefinitely.

In this project, formatting the user notification is done using kotlinx.html library. The library allows

to create

an HTML document along with custom CSS that further, in notification-service, can be sent to a user’s email or over WebSocket. We will see that

formatting in the Testing section.

Note: when considering book-service we have seen that there is an alternative to the connector to pass messages from outbox table of

book database to Kafka. Similarly, an alternative to pass messages from Kafka to inbox table of user database is to create a component

in user-service that listens an appropriate Kafka topic and puts messages from the topic to the inbox table.

Notification service

This section covers:

-

delivery of notifications to users, specifically, through WebSocket

-

implementation of the UI for receiving the notifications

This service receives commands to send notifications and delivers them to users through email or, for demo purposes, through

WebSocket. As well as book-service and user-service, notification-service is an example of implementation of

Inbox and Saga (as the final participant) patterns; but unlike those services, it doesn’t need Transactional outbox pattern. The implementation of these

and other event-driven architecture patterns is a key part of microservices implementation in this project and was considered in Book service and User

service sections. So in this section, we will focus on the message delivery, specifically, through WebSocket, because the

email delivery

is quite straightforward.

If you want to test the email delivery, you need to:

-

use

testprofileThe setting up of a Spring profile is discussed in the Build section; briefly, you need to:

-

configure

ProcessAotGradle task in the build script addingtestprofile -

specify the profile as environment variable for the service

-

-

provide SMTP properties for Gmail or other email provider in the testing environment config

Please note that email delivery implementation is only for testing purposes; it sends an email to the sender.

WebSocket is an application level protocol providing full-duplex communication channels over a single TCP connection that is using TCP as transport layer. STOMP is a simple text-based messaging protocol that can be used on top of the lower-level WebSocket.

To implement WebSocket server, first, we need to add the org.springframework.boot:spring-boot-starter-websocket dependency to

the build script.

In this project, WebSocket is only used to notify users about some changes in the books in the library, and we don’t need to receive messages from users that is the messaging is unidirectional. The application is configured to enable WebSocket and STOMP messaging as follows:

@Configuration

@EnableWebSocketMessageBroker

class WebSocketConfig : WebSocketMessageBrokerConfigurer {

override fun registerStompEndpoints(registry: StompEndpointRegistry) {

registry.addEndpoint("/ws-notifications").setAllowedOriginPatterns("*").withSockJS()

}

override fun configureMessageBroker(config: MessageBrokerRegistry) {

config.enableSimpleBroker("/topic")

}

}WebSocketConfig is annotated with @Configuration to indicate that it is a Spring configuration class and with @EnableWebSocketMessageBroker

that enables WebSocket message handling, backed by a message broker. The registerStompEndpoints method registers the /ws-notifications endpoint,

enabling SockJS fallback options so that alternate transports can be used if WebSocket is not available. The

SockJS client will attempt to connect to /ws-notifications and use the best available transport (WebSocket, XHR streaming, XHR polling, and so

on). The configureMessageBroker method implements the default method in WebSocketMessageBrokerConfigurer to configure the message broker. It

starts by calling enableSimpleBroker to enable a simple memory-based message broker to carry the messages on destinations prefixed with /topic.

A command to send notification from the inbox table is processed as follows:

private fun processSendNotificationCommand(notification: Notification) {

when (notification.channel) {

Notification.Channel.Email -> {

emailService.send(notification.recipient, notification.subject, notification.message)

}

else -> throw NotificationServiceException("Channel is not supported: {${notification.channel.name}}")

}

}At any environment except

test,

the emailService is an instance of

EmailServiceWebSocketStub:

SimpMessagingTemplate@Service

class EmailServiceWebSocketStub(

private val simpMessagingTemplate: SimpMessagingTemplate

) : EmailService {

private val log = LoggerFactory.getLogger(this.javaClass)

override fun send(to: String, subject: String, text: String) {

// convert back to Notification

val notification = Notification(Notification.Channel.Email, to, subject, text, LocalDateTime.now())

simpMessagingTemplate.convertAndSend("/topic/library", notification)

log.debug("A message over WebSocket has been sent")

}

}The WebSocket client is a part of the user interface that was developed within the notification-service for demo purposes. The client

receives messages the sending of which was shown in the previous section; the messages are displayed in the UI.

The following dependencies are used to create the UI:

implementation("org.webjars:webjars-locator-lite")

implementation("org.webjars:sockjs-client:$sockjsClientVersion")

implementation("org.webjars:stomp-websocket:$stompWebsocketVersion")

implementation("org.webjars:bootstrap:$bootstrapVersion")

implementation("org.webjars:jquery:$jqueryVersion")By default, any resources with a path in /webjars/** are served from JAR files if they are packaged in the WebJars format. The

org.webjars:webjars-locator-lite dependency is used to specify

version agnostic URLs for WebJars.

The /resources/static

folder of the service contains the resources for the frontend application: app.js, index.html, and main.css. The most interesting is

app.js:

var stompClient = null;

function setConnected(connected) {

$("#connect").prop("disabled", connected);

$("#disconnect").prop("disabled", !connected);

if (connected) {

$("#notifications").show();

}

else {

$("#notifications").hide();

}

$("#messages").html("");

}

function connect() {

var socket = new SockJS('/ws-notifications');

stompClient = Stomp.over(socket);

stompClient.connect({}, function (frame) {

setConnected(true);

console.log('Connected: ' + frame);

stompClient.subscribe('/topic/library', function (notification) {

showNotification(JSON.parse(notification.body));

});

});

}

function disconnect() {

if (stompClient !== null) {

stompClient.disconnect();

}

setConnected(false);

console.log("Disconnected");

}

function showNotification(notification) {

let message = `

<tr><td>

<div><b>To: ${notification.recipient} | Subject: ${notification.subject} | Notification created at: ${parseTime(notification.createdAt)}</b></div>

<br>

<div>${notification.message}</div>

</td></tr>`;

$("#messages").append(message);

}

function parseTime(dateString) {

return new Date(Date.parse(dateString)).toLocaleTimeString();

}

$(function () {

$("form").on('submit', function (e) {

e.preventDefault();

});

$("#connect").click(function() { connect(); });

$("#disconnect").click(function() { disconnect(); });

});The main pieces of this JavaScript file to understand are connect and showNotification functions.

The connect function uses SockJS and stomp.js (these libraries are imported in

index.html)

to open a connection to /ws-notifications (STOMP over WebSocket endpoint configured in the previous section). Upon a successful connection,

the client subscribes to the /topic/library destination, where the service publishes notifications to all users. When a notification is

received on that destination, it is appended to the DOM:

Notification delivery will be demonstrated in the Testing section.

It would be also possible to set up email streaming from the database to some email provider (in this project, it is Gmail). It could

be done, for example, by storing notifications in outbox table of the considered service and setting up two connectors: Debezium

PostgreSQL connector that would read messages containing the notifications from outbox table and send them to Kafka and some Email

sink connector that would put notification to the provider users' inboxes. I decided though that it would be over-engineering and

current implementation is enough for this project.

Build

This section covers how to set up the following:

-

building a native image of Spring Boot application and an appropriate Docker image containing the native image

-

reflection hints for the native image

-

a Spring profile for the native image

-

health checks for the Docker image

GraalVM native images are standalone executables that can be generated by processing compiled Java applications ahead-of-time. Native images generally have a smaller memory footprint and start faster than their JVM counterparts.

The Spring Boot Gradle plugin automatically configures

AOT tasks when the Gradle Plugin for GraalVM Native Image is

applied. In such case, bootBuildImage task will generate a native image rather than a JVM one.

While there are many benefits from using native images, please note:

-

GraalVM imposes a number of constraints and making your application (in particular, a Spring Boot microservice), a native executable might require a few tweaks.

Particularly, the Spring @Profile annotation and profile-specific configuration have limitations.

-

building a native image of a Spring Boot microservice takes much more time than a JVM one.

As an example, you can look at execution times of builds of three microservices using this GitHub Actions workflow. On my machine, it takes about 4 minutes to rebuild all three microservices in parallel with Gradle.

Building a standalone binary with the native-image tool takes place under a closed world assumption (see

Native Image docs). The native-image tool performs a static analysis to

see which classes, methods, and fields within your application are reachable and must be included in the native image. The analysis

cannot always exhaustively predict all uses of dynamic features of Java, specifically, it can only partially detect application

elements that are accessed using the Java Reflection API. So, you need to provide it with details about reflectively accessed classes,

methods, and fields. In this project, it is done by Spring AOT engine that generates hint files containing

reachability metadata — JSON data that describes how GraalVM

should deal with things that it can’t understand by directly inspecting the code.

To provide my own

hints, I register some classes and their public methods and constructors for reflection explicitly:

class CommonRuntimeHints : RuntimeHintsRegistrar {

override fun registerHints(hints: RuntimeHints, classLoader: ClassLoader?) {

hints.reflection()

// required for JSON serialization/deserialization

.registerType(Book::class.java, MemberCategory.INVOKE_PUBLIC_METHODS, MemberCategory.INVOKE_DECLARED_CONSTRUCTORS)

.registerType(Author::class.java, MemberCategory.INVOKE_PUBLIC_METHODS, MemberCategory.INVOKE_DECLARED_CONSTRUCTORS)

.registerType(BookLoan::class.java, MemberCategory.INVOKE_PUBLIC_METHODS, MemberCategory.INVOKE_DECLARED_CONSTRUCTORS)

.registerType(CurrentAndPreviousState::class.java, MemberCategory.INVOKE_PUBLIC_METHODS, MemberCategory.INVOKE_DECLARED_CONSTRUCTORS)

.registerType(Notification::class.java, MemberCategory.INVOKE_PUBLIC_METHODS, MemberCategory.INVOKE_DECLARED_CONSTRUCTORS)

.registerType(OutboxMessage::class.java, MemberCategory.INVOKE_PUBLIC_METHODS, MemberCategory.INVOKE_DECLARED_CONSTRUCTORS)

// required to persist entities

// TODO: remove after https://hibernate.atlassian.net/browse/HHH-16809

.registerType(Array<UUID>::class.java, MemberCategory.INVOKE_DECLARED_CONSTRUCTORS)

}

}In the future, that approach may change.

Then, CommonRuntimeHints is imported by all microservices, for example:

CommonRuntimeHints usage@SpringBootApplication

@ImportRuntimeHints(CommonRuntimeHints::class)

@EnableScheduling

class BookServiceApplicationIn this project, generated hint files can be found in <service-name>/build/generated/aotResources folder. You can see that besides

the custom reflection hints it also contains automatically generated hints, for example, for the resources that are needed for the

microservices such as configuration (application.yaml) and database migration (/db/migration/*) files.

To build a Docker image containing a native image of Spring Boot application, you need to execute ./gradlew :<service name>:bootBuildImage

in the root directory of the project. On Windows, you can use

this script to rebuild all three microservices and restart the

whole project. The script contains commands for parallel and sequential building of all microservices. Please note that using this approach you

don’t need Dockerfile for any microservice.

Let’s consider the build configuration on the example of book-service: the command is gradlew :book-service:bootBuildImage. bootBuildImage

is a task provided by Spring Boot Gradle Plugin (org.springframework.boot); under the hood it uses Gradle Plugin for GraalVM Native Image

(org.graalvm.buildtools.native) to generate the native image (rather than a JVM one) and packs it into

OCI image using Cloud Native Buildpacks (CNB).

The build config of the service looks like this:

book-service build configurationplugins {

id("org.springframework.boot")

...

id("org.graalvm.buildtools.native")

...

}

tasks.withType<ProcessAot> {

args = mutableListOf("--spring.profiles.active=test")

}

tasks.withType<BootBuildImage> {

buildpacks = setOf("paketobuildpacks/java-native-image", "paketobuildpacks/health-checker")

environment = mapOf("BP_HEALTH_CHECKER_ENABLED" to "true")

imageName = "$dockerRepository:${project.name}"

}In the config of BootBuildImage task, I set:

-

list of buildpacks

By default, the builder uses buildpacks included in the builder image and apply them in a pre-defined order. I need to provide

buildpacksexplicitly to enable health checker in the resulting image.java-native-imagebuildpack allows users to create an image containing a GraalVM native image application.health-checkerbuildpack allows users to enable health checker in the resulting image (usage of health checks will be shown later in this section). -

environment variables

-

BP_HEALTH_CHECKER_ENABLEDspecifies whether a Docker image should contain health checker binary.

-

-

image name

dockerRepositoryisdocker.io/kudryashovroman/event-driven-architectureso the result string isdocker.io/kudryashovroman/event-driven-architecture:book-service; it consists of:-

Docker Hub URL

-

my username on Docker Hub

-

name of the repository

-

tag of the service (images of all services are pushed to the same repository with different tags)

-

You can find more properties to customize an image in the docs.

Of course, you can still build a Docker image of a regular JVM-based application by applying an appropriate build configuration which can help you to reduce

build time of the services and thus speed up local development. A quick way to do that is to remove org.graalvm.buildtools.native from